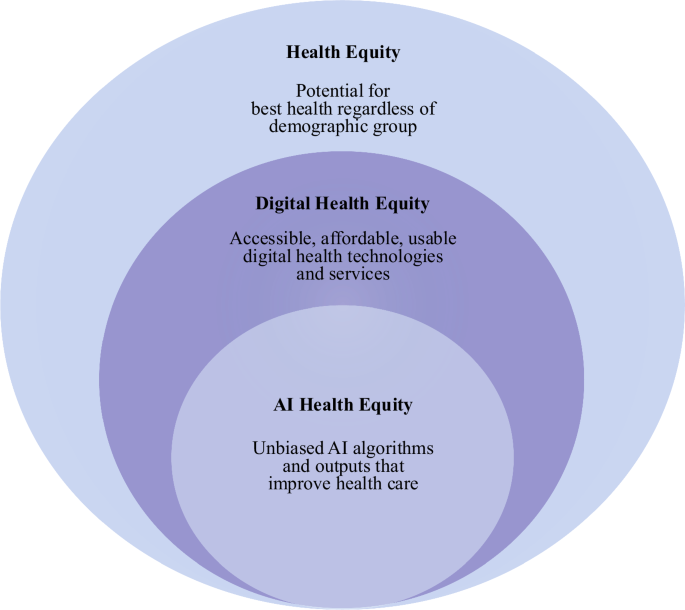

Medical artificial intelligence (AI) has the power to transform healthcare. From early diagnosis to individualized treatment plans, AI technologies are becoming an integral part of the practice of medicine. But as we embrace this technological shift, one fundamental question stands out: Are these technologies fair and accurate for everyone? Addressing gender bias in healthcare AI is essential to ensure that progress reaches all patients equally.

When medical AI solutions ignore gender differences or amplify existing inequalities, the effects go far beyond technical errors. They affect people: men, women, and gender-diverse individuals, who deserve medical care that recognizes their unique biology and personal experiences.

Why Gender Bias in Medical AI Matters

Consider a female patient who comes to her doctor with chest pain. An AI model trained mainly on male patients’ data may misread her symptoms, causing a delayed or wrong diagnosis. Research indicates that women often experience symptoms of heart disease that differ from men’s, yet AI systems sometimes fail to recognize this.

Gender bias in AI appears in many forms:

- Data skew: Historically, most medical datasets have underrepresented women and gender minorities.

- Model design: Models tend not to account for sex-based biological variation.

- Evaluation metrics: AI performance is occasionally checked on non-diverse samples.

If these biases remain unchecked, healthcare providers relying on these tools may refer women for specialized treatment less often or offer less accurate treatment recommendations, widening health disparities.

Real-World Impact: Cases Showing the Cost of Bias

Consider the case of cardiovascular disease, the leading cause of death globally. Women more often report atypical symptoms such as nausea or fatigue instead of the typical chest pain men experience. AI diagnostic programs trained primarily on male symptomatology can miss these signs.

According to the American Heart Association Task Force on Clinical Practice Guidelines, researchers have shown that some AI-driven cardiac risk assessments consistently underestimated women’s risk. This underestimation resulted in fewer preventive interventions and a higher likelihood of poor outcomes.

Similarly, oncology research reveals bias in cancer detection AI. For instance, breast cancer detection algorithms trained on women from only a few ethnic groups may not generalize to other groups and can fail to detect early warning signs in minority women.

These findings demonstrate the essential need for diverse, representative data and gender-aware algorithms.

Strategies for Mitigating Gender Bias in Medical AI

The healthtech community is stepping up to this challenge with creative solutions targeting the development of more equitable AI systems.

1. Gathering Balanced and Inclusive Data

A starting point is creating medical datasets that truly reflect gender diversity. This involves proactively recruiting women and gender-diverse participants into clinical trials and health studies. Healthcare providers can also collaborate with technology companies to anonymize and pool diverse patients’ data so that AI models are trained on a wide range of cases.

For instance, Google Health’s AI research groups have established frameworks to audit datasets and rebalance them for gender skew before model training. This precaution improves diagnostic accuracy across genders.
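The audit-and-rebalance step can be illustrated with a minimal sketch. It is not taken from any specific vendor's framework; the function name `gender_balance_weights`, the toy records, and the choice of inverse-frequency weighting are all assumptions made for illustration. The idea is to count records per gender group and assign each record a weight so that every group contributes equally in total during training.

```python
from collections import Counter

def gender_balance_weights(genders):
    """Return one inverse-frequency weight per record.

    Hypothetical helper: each gender group ends up with the same
    total weight, regardless of how many records it has.
    """
    counts = Counter(genders)
    n_groups = len(counts)
    total = len(genders)
    # weight = total / (n_groups * group_count), so the weights
    # within each group sum to total / n_groups
    return [total / (n_groups * counts[g]) for g in genders]

# Toy dataset (assumed): 6 male records, 2 female records
genders = ["M"] * 6 + ["F"] * 2
weights = gender_balance_weights(genders)

print("Records per group:", dict(Counter(genders)))
print("Weight for a male record:  ", round(weights[0], 3))
print("Weight for a female record:", round(weights[-1], 3))
```

Weights like these can typically be passed to a training routine as per-sample weights. Reweighting is only one option; collecting more data from the underrepresented group remains preferable where it is feasible.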

2. Incorporating Gender Awareness in Algorithm Design

AI developers now place greater focus on sex-specific biological indicators and symptoms during model development. By integrating recognized physiological differences, e.g., hormonal cycles or heart rate variability, AI systems can provide more tailored insights.

In addition, algorithms are tested on diverse demographic populations. Testing across multiple subgroups checks that the model performs well not just on average, but in every subgroup.
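Subgroup testing can be sketched in a few lines. The example below is illustrative only: the function name `sensitivity_by_group` and the toy labels are assumptions, and sensitivity (true positive rate) is just one of several metrics worth comparing across groups. It shows how an acceptable overall figure can hide a gap between groups.

```python
from collections import defaultdict

def sensitivity_by_group(y_true, y_pred, groups):
    """Compute sensitivity (true positive rate) for each subgroup."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    # Only groups with at least one positive case appear in the result
    return {g: true_pos[g] / positives[g] for g in positives}

# Toy data (assumed): 1 = disease present / predicted, 0 = absent
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["M"] * 5 + ["F"] * 5

per_group = sensitivity_by_group(y_true, y_pred, groups)
overall = sensitivity_by_group(y_true, y_pred, ["all"] * len(y_true))["all"]

print("Overall sensitivity:", overall)      # 0.625
print("Per-group sensitivity:", per_group)  # {'M': 0.75, 'F': 0.5}
```

In this toy case the model detects three of four positive cases in men but only two of four in women, a gap the overall figure alone does not reveal.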

3. Explainability and Transparency

Fixing gender bias in health AI also means making AI decisions transparent. Clinicians and patients should be able to understand how AI reaches its recommendations, especially when the case involves gender-related factors.

Explainable AI tools enable users to check whether the system has taken gender-based variables into account and whether biases could be influencing its outputs. Transparency builds trust and also enables specialists to identify errors early.

4. Regulatory Oversight and Ethical Standards

U.S. and international healthcare regulators increasingly expect AI tools to prove fairness before approval. Regulators such as the FDA have also provided guidance on how to assess bias risk in AI-based medical devices.

Organizations like Partnership on AI encourage ethical frameworks that value inclusivity and equity in healthcare technologies.

Cutting-Edge Technologies Bridging the Gap

Some breakthroughs highlight how technology is narrowing gender bias in healthcare AI.

Federated learning: This method enables AI to learn from data across several hospitals without revealing confidential patient data. It enhances dataset heterogeneity without jeopardizing privacy.

Synthetic data generation: Using AI to generate realistic, balanced datasets supplements sparse female or minority data, making the model fairer.

Natural Language Processing (NLP): NLP models processing patient records and physician notes are being calibrated to identify gender-specific language and symptoms.

For instance, IBM Watson Health has recently tested an NLP platform that identifies gender inequities in patient records, enabling clinicians to modify care pathways in response.
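To make the federated learning idea above more concrete, here is a minimal sketch of the aggregation step, assuming a FedAvg-style scheme in which each hospital trains locally and shares only model parameters, never patient records. The function name `federated_average`, the two-parameter toy model, and the site sizes are hypothetical.

```python
def federated_average(site_weights, site_sizes):
    """Average per-site model parameters, weighted by local sample count.

    site_weights: list of parameter lists, one per hospital
    site_sizes:   number of local training records at each hospital
    """
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]

# Toy example (assumed): two hospitals, each with a 2-parameter local model
site_weights = [[0.25, 1.0], [0.75, 2.0]]
site_sizes = [100, 300]

global_weights = federated_average(site_weights, site_sizes)
print(global_weights)  # [0.625, 1.75]
```

Only the parameter lists leave each site in this sketch. A real deployment would add secure communication and often further privacy protections, which are out of scope here.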

Looking Ahead: The Path Toward Equitable Medical AI

The path from diagnosis to treatment driven by AI is rapidly changing. Eliminating gender bias from medical AI demands continuous effort from tech pioneers, healthcare professionals, and regulators.

Through the adoption of varied data, gender-sensitive design, transparency, and ethical norms, healthtech can develop AI technologies that benefit everyone. And healthcare technology, after all, is supposed to enhance humanity, not ignore it.

How will your organization play its part in this crucial change? The question is no longer if bias is present, but how well we mitigate it.

FAQs

1. Why is there gender bias in medical AI systems?

Gender bias is primarily the result of datasets that underrepresent women or gender-diverse populations and of algorithms that fail to account for sex-based biological differences.

2. How do healthcare providers determine whether an AI tool is gender biased?

Providers can look for transparency reports, check AI performance across gender groups, and review independent audits or peer-reviewed articles.

3. Are there any rules guaranteeing fairness in medical AI?

Regulators increasingly expect evidence of fairness. The FDA has provided guidance on assessing bias risk in AI-based medical devices, and organizations worldwide are formulating ethics guidelines.

4. Can AI be used to decrease gender disparities in healthcare?

Yes. Properly designed and trained, AI can identify disparities, tailor treatment, and enhance diagnostic accuracy by gender.

5. How do patients contribute to ending gender bias in medical AI?

Patients can advocate for inclusive research, take part in clinical trials, and discuss AI-driven care decisions with their healthcare providers.