Would you trust a machine to make your diagnosis, or only to help your doctor reach it faster and more accurately? For millions of patients across the U.S., this question isn’t theoretical anymore. AI is now embedded in everything from radiology scans to hospital chatbots. Yet even as the algorithms get smarter, the trust gap is widening. That’s why health systems and tech leaders must urgently ask: how do we earn patient trust in AI‑driven healthcare systems?

In this article, you’ll uncover how to close that gap through transparency, clinician-led communication, ethical design, and real-world proof.

Whether you’re deploying AI in diagnostics or digital front doors, this is your playbook for building systems that patients not only use, but believe in.

Why Patient Trust Matters in AI‑Powered Care

AI has rapidly become a core part of modern healthcare, but public confidence hasn’t kept pace.

A pervasive trust gap

Recent research by Philips shows that only about 59% of patients feel optimistic that AI could improve outcomes, compared with 79% of healthcare professionals.

Three out of four U.S. patients still don’t trust AI in healthcare settings, which is a real barrier to adoption.

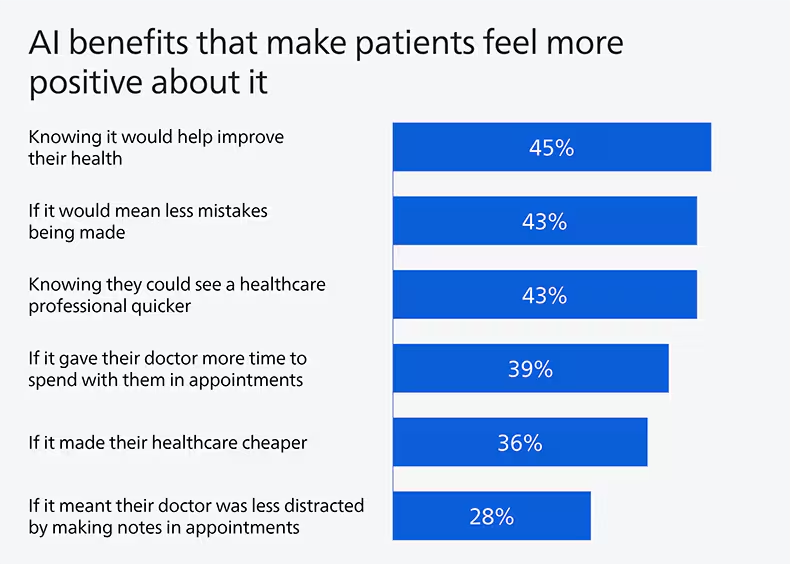

Trust is linked to perceived benefits

Another study of U.S. adults found that only 19.5% expect AI to enhance their relationship with their doctor, while 30% believe it can improve access to care. Trust in providers and health systems correlated strongly with belief in AI’s benefits.

How to Earn Patient Trust in AI‑Driven Healthcare Systems

Earning trust is about showing patients that innovation still has a human heart. Here are key principles healthtech leaders can follow to foster confidence, empathy, and transparency in every AI-driven interaction.

1. Pair AI with human oversight

Patients trust clinicians more than algorithms. Philips, for example, positions its AI tools to augment doctors rather than replace them. In practice, conversational agents such as “Mo” have improved patient satisfaction and perceived empathy when overseen by physicians.

2. Be transparent about AI’s role

Clarity about how AI works is essential. Patients want to know when AI is used, how it reaches decisions, and what its limitations are. Clinicians need the same clarity: the AMA reports that physicians want information on intended use, bias mitigation, and validation before they recommend or endorse AI tools.

3. Ground systems in fairness and safety

Surveys show that some demographic groups (women, older patients, and less tech‑savvy individuals) are less comfortable with unexplained AI decisions. Safe systems must be explainable, compliant with regulations, and carefully audited.

4. Show evidence of reliability and benefit

Highlight real results. Stamford Health in Connecticut used an FDA‑cleared AI tool to detect breast arterial calcification on mammograms and found a 17% increase in correlation with cardiac events, giving each mammogram dual value: cancer screening plus a cardiovascular risk signal. Meanwhile, Microsoft’s AI Diagnostic Orchestrator achieved 85.5% diagnostic accuracy on complex New England Journal of Medicine cases, outperforming unaided physicians, yet Microsoft framed it as a co-pilot, not a replacement.

5. Invest in inclusive education

Patients who are more familiar with AI are more likely to ask critical questions, but also more likely to trust the system when it’s explained clearly. Health systems should offer plain-language patient education, with providers translating AI’s technical capabilities into terms patients understand.

6. Embrace ethical design and oversight

At SAS Innovate 2025, the emphasis was on “response‑ability”: designing AI that’s not only technically sound but socially accountable from day one. Health leaders should adopt similar frameworks for trustworthy AI.

The Role of Governance in Trust-Building

Earning trust in AI‑driven healthcare systems isn’t just a technical or clinical responsibility; it’s a leadership mandate. Healthtech executives, hospital boards, and policy architects must treat ethical AI governance as a non-negotiable part of innovation.

According to the World Economic Forum’s 2025 insights on AI in global health, forward-thinking organizations are already embedding accountability frameworks into every AI deployment, from data sourcing and model training to real-time monitoring and patient consent protocols. These governance layers aren’t just safeguards. They’re signals to patients that their rights, dignity, and data are respected.

Successful leadership also means fostering cross-functional collaboration. When compliance officers, engineers, physicians, and patient advocates co-design AI workflows, the result is systems that reflect real-world concerns, not just ideal-world performance metrics.

Trust isn’t maintained by promises. It’s built on visible responsibility. When patients know someone is accountable, not just something, they’re far more likely to engage, adopt, and advocate.

Rebuilding Trust, One Human‑Centered Innovation at a Time

Healthcare is personal, and so is trust. As AI continues to accelerate diagnostics, automate workflows, and expand access, its true value will be measured not just in outcomes, but in confidence. Patients don’t fear technology; they fear being removed from the equation.

The path forward isn’t about choosing between humans and machines. It’s about designing systems where the two work in harmony. Where algorithms enhance clinical wisdom. Where transparency drives acceptance. And where patients feel seen, heard, and safe.

Earning patient trust in AI‑driven healthcare systems won’t happen with code alone; it requires courage, empathy, and leadership. For every CIO, healthtech founder, provider, or policymaker reading this: the future of AI in healthcare depends not just on what we build, but how deeply we care while building it.

Trust isn’t the byproduct of innovation; it’s the blueprint.

FAQs

1. Why don’t most patients trust AI in healthcare yet?

Healthcare is deeply personal. Many patients still fear that AI might replace human care or make decisions without empathy. Trust is harder to build when the “face” of care becomes a black box. That’s why pairing AI with human oversight is so critical.

2. Can AI ever be as trustworthy as a doctor?

AI can be accurate, but trust isn’t just about outcomes. It’s about communication, compassion, and accountability. When used as a tool to support, not replace, clinicians, AI becomes part of a trusted team, not a threat to it.

3. What’s the role of empathy in AI-powered care?

Empathy remains uniquely human, but AI can support it. For example, AI chatbots trained in empathetic language can ease anxiety during triage. Still, patients feel most reassured when a human clinician is in the loop.

4. How are leading healthcare systems building trust in AI?

By being transparent, inclusive, and ethical. Organizations like Philips and Mayo Clinic are designing AI systems with clinical oversight, clear patient communication, and data protection protocols, earning trust one decision at a time.

5. What can healthcare leaders do to close the trust gap?

Lead with values, not just technology. Trust grows when leaders prioritize patient safety, explainability, and accountability in every AI deployment. It’s not about showcasing what AI can do; it’s about proving that the people behind it care.

Dive deeper into the future of healthcare.

Keep reading on Health Technology Insights.

To participate in our interviews, please write to our HealthTech Media Room at sudipto@intentamplify.com