This is the kind of story that stays with you long after you’ve read the headline. A pregnant woman in the U.S., Natallia Tarrien, noticed an unusual tightness in her jaw. It wasn’t excruciating, and it wasn’t something most people would flag as a red alert. Still, she did what millions of people do every day: she asked ChatGPT for help. What came next went viral, and for good reason. In what’s now being described as the moment “ChatGPT saves an unborn baby,” a simple AI prompt may have saved two lives.

The chatbot didn’t just offer general information. It zeroed in on something subtle yet critical: the symptom could point to high blood pressure or even preeclampsia. That prompt nudged her to check her vitals. Her blood pressure was dangerously high. Within hours, she was rushed to the hospital. The diagnosis? Preeclampsia. The intervention? An emergency delivery. The outcome? A healthy mother and a healthy baby.

This article explores:

- The real story behind the incident.

- Why preeclampsia remains one of the most serious, under-discussed pregnancy risks.

- The evolving role of conversational AI in digital health and where its limits lie.

- Strategic implications for health tech executives, clinicians, product teams, and investors.

- The future of AI-driven patient engagement in maternal and preventive care.

If you’re a health tech leader, hospital CIO, digital health founder, or simply a tech-forward parent-to-be, this moment matters to you. This isn’t just a story about innovation; it’s about trust, timing, and the human instinct to ask a machine, “Should I be worried?”

And sometimes, the machine might just say, “Yes. Get help. Now.”

The Story: How ChatGPT Helped Save Two Lives

In February 2024, Natallia Tarrien, a wellness coach and expectant mother from Florida, wasn’t feeling quite right. A persistent, unusual pressure lingered in her jaw. It didn’t fit the classic mold of a pregnancy symptom, but it was enough to spark concern. She turned to ChatGPT without expecting much: perhaps reassurance, or a passing note about muscle strain. Instead, she got a wake-up call.

ChatGPT flagged the possibility of elevated blood pressure and preeclampsia, a serious pregnancy complication that often goes unnoticed until it’s dangerously advanced. Prompted by that suggestion, Natallia checked her blood pressure with an at-home monitor. The numbers were alarming. Shortly after, she was in an ambulance en route to the hospital. There, clinicians diagnosed her with preeclampsia. An emergency C-section followed. Her son was delivered safely.

Had she waited even a few more hours, the outcome might have been very different. Her case has since gone viral, making headlines globally, from India’s Economic Times to U.S.-based sites like Newsweek, Parents.com, and Motherly.

This is what the phrase “ChatGPT saves an unborn baby” now symbolizes: a single nudge from AI, leading to timely human intervention that ultimately saved lives.

“ChatGPT told me, ‘This might be a sign of preeclampsia. Go check your blood pressure now.’ That sentence probably saved my baby’s life and mine,” said Natallia Tarrien, via Instagram.

The significance of this story lies not just in the outcome but in its quiet, everyday setting. There was no hospital tech, no wearable gadget, and no digital triage dashboard. Just a mother, her smartphone, and a well-timed AI suggestion. That simplicity is exactly what makes it so powerful and, for health-tech leaders, so urgent to understand.

Why This Moment Resonates with Patients and Professionals Alike

What makes this case different from past “AI saves the day” narratives is its relatability. It wasn’t a rare disease diagnosis or an edge-case prediction in a lab. This was maternal health, a space where delayed care, especially for preeclampsia, can escalate in hours.

And it struck a nerve. Clinicians have long warned about the subtleties of preeclampsia symptoms. According to the American College of Obstetricians and Gynecologists (ACOG), up to 8% of all pregnancies in the U.S. are affected by preeclampsia, and it remains a leading cause of maternal mortality.

Symptoms like jaw tension, blurry vision, or mild headaches can seem benign, but the consequences of missing them are anything but. That’s what this story crystallizes: AI doesn’t need to be perfect; it just needs to prompt attention at the right moment.

Clinical Caution: ChatGPT Didn’t Diagnose

ChatGPT didn’t run a blood test. It didn’t monitor heart rate variability or scan an EHR. It offered a probabilistic suggestion based on symptom descriptions. That’s not the same as a diagnosis. What it did, though, was create a bridge: between intuition and action, between concern and clinical validation.

In AI terms, this is called decision support, not decision-making. And that distinction matters. Because if we blur the lines, we risk overpromising what AI tools can do and under-preparing patients for when they shouldn’t rely on them.

Yet, even within those boundaries, this case illustrates AI’s potential to reduce the latency between symptom onset and care.

For Healthcare Leaders: A Defining Use Case

If you’re a hospital CIO, chief medical officer, or investor in digital health platforms, this story is a case study in behavior change at scale.

Think about it:

- A non-clinical tool prompted timely clinical action.

- A common device (smartphone) became a point-of-care decision accelerator.

- A free AI tool outpaced passive symptom monitoring apps by offering directional insight.

For organizations exploring AI-driven patient engagement, this moment isn’t an anecdote; it’s a north star. And the challenge now is to design platforms that enable more moments like this, while keeping guardrails, privacy, and clinical accuracy intact.

Preeclampsia: A Silent Risk Hiding in Plain Sight

If there’s one lesson to extract from this incident, it’s this: even subtle symptoms can conceal life-threatening conditions. And few conditions exemplify this better than preeclampsia.

Preeclampsia is a hypertensive disorder of pregnancy that typically develops after 20 weeks of gestation. It is marked by high blood pressure, can damage organs such as the kidneys and liver, and can lead to premature birth; if left untreated, it can be fatal to both mother and child.

What makes it particularly dangerous is its ability to hide in plain sight. Mild headaches, jaw tension, swelling, and blurred vision, symptoms that could easily be dismissed, can signal escalating maternal distress.

That’s why so many medical professionals describe preeclampsia as a “silent killer”: it doesn’t always announce itself with pain or drama. Often, it’s just a quiet shift in the body, invisible until it’s too late.

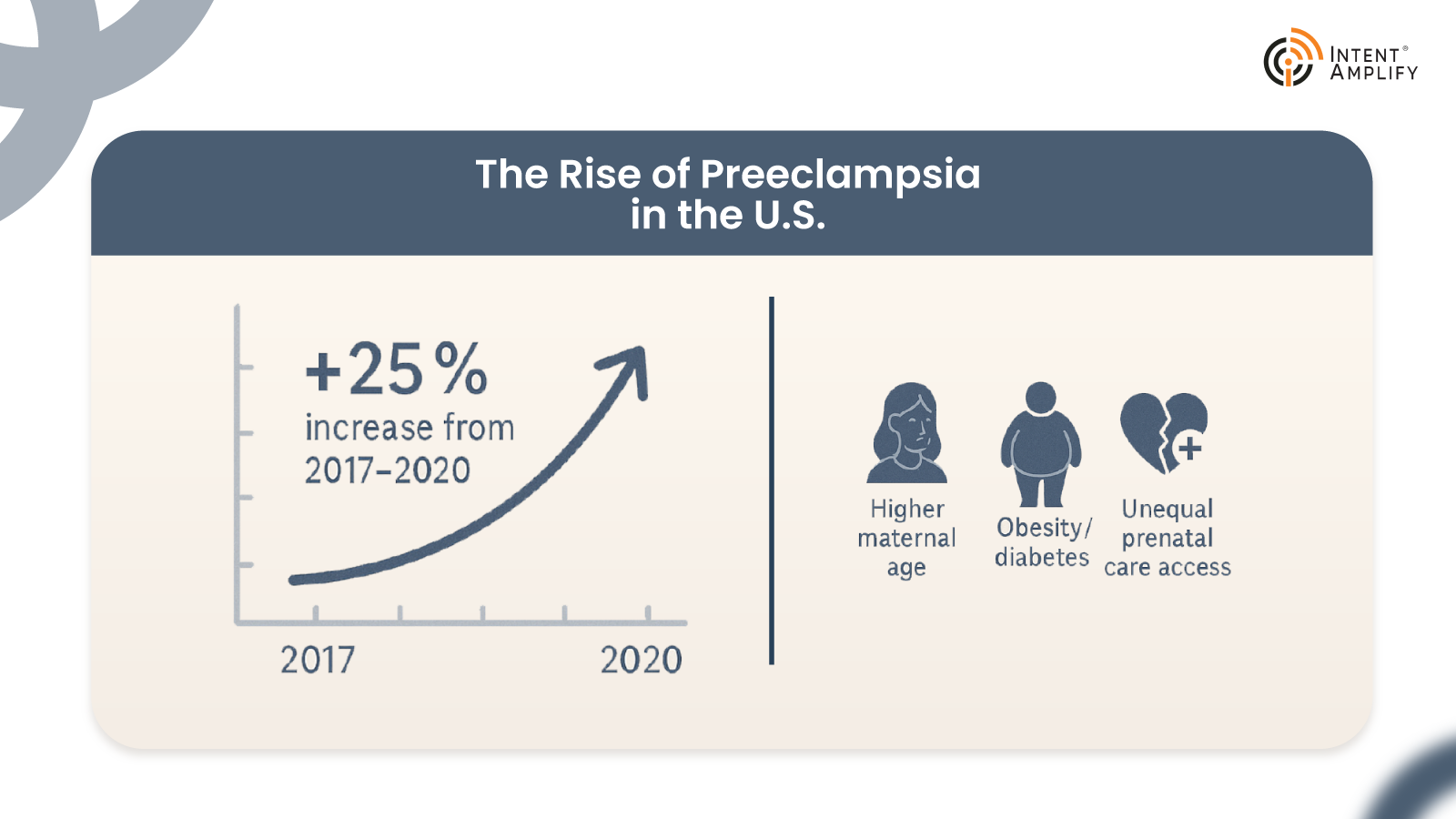

A Rising Concern in the U.S.

In recent years, preeclampsia rates have surged in the United States. According to the Centers for Disease Control and Prevention (CDC), the rate of hypertensive disorders during pregnancy increased by 25% between 2017 and 2020. Today, it affects 1 in every 12 pregnancies. The rise is driven by a mix of factors:

- Higher maternal age.

- Increased obesity and diabetes rates.

- Racial and socioeconomic disparities in access to prenatal care.

Black mothers, in particular, are 60% more likely to develop preeclampsia and three times more likely to die from its complications compared to white mothers, as noted by the Preeclampsia Foundation.

The data is as troubling as it is clear: preeclampsia is not rare. And our systems are not catching it early enough.

Traditional Monitoring Isn’t Enough

Historically, prenatal care has relied on periodic in-clinic blood pressure checks, patient-reported symptoms, and routine screenings. But the gaps between appointments, especially in rural or underinsured populations, can stretch weeks. For a condition like preeclampsia, where changes can occur overnight, that’s a dangerous delay.

This is where digital health tools and AI-based symptom checkers have begun to make their case. While they’re not replacements for diagnostic tools, they can act as always-on companions, triaging concerns, raising red flags, and guiding patients to act sooner rather than later. When platforms like ChatGPT suggest checking blood pressure in the presence of subtle symptoms, they can essentially serve as digital “early warning systems.”

As we saw in Natallia Tarrien’s case, a small nudge, “Check your blood pressure,” was the difference between emergency care and potential tragedy.

And that brings us to the bigger picture: when ChatGPT saves an unborn baby, it becomes part of the public conversation, raising not just hope but responsibility.

What Healthtech Leaders Need to Acknowledge

For digital health platforms, this moment highlights a glaring opportunity. There is real, emotional, urgent demand for AI tools that can help people recognize serious conditions sooner. The question is whether the ecosystem can meet that demand safely.

A few things are clear:

- AI models need pregnancy-specific training datasets. Preeclampsia symptoms often differ across populations. Building models that reflect those differences is a necessity.

- Wearables and home-monitoring tools must be integrated into AI workflows. Combining real-time blood pressure readings with AI-generated risk alerts could redefine at-home prenatal safety.

- Equity must be front and center. Bias in training data, lack of language support, and inconsistent access to technology are all barriers that can widen maternal health gaps if not addressed head-on.

And beyond product design, it’s about trust. If a patient is going to listen to a chatbot over Google search results, they need to feel confident that the system prioritizes their safety, privacy, and dignity.

A Call to Real-World Integration

Organizations like Northwell Health and Mayo Clinic are already investing in AI-powered maternal care solutions, some using natural language processing (NLP) to monitor for early signs of distress. But these are still exceptions, not norms.

Imagine a future where:

- Chatbots are trained in OB/GYN scenarios.

- Symptom prompts are verified against real patient-reported data.

- Clinicians are alerted when patients receive AI-flagged warnings.

- Remote BP monitors sync directly with patient portals and AI care guides.

This could transform healthcare systems and prevent hundreds of thousands of emergency visits, birth complications, and even deaths each year.
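To make the integration imagined above a little more concrete (remote BP monitors feeding readings into a system that alerts clinicians), here is a minimal Python sketch of a rule-based escalation step. It is an illustration under stated assumptions, not a clinical tool: the names `BPReading`, `Triage`, `triage_reading`, and `should_alert_clinician` are hypothetical, and the cut-offs (140/90 mmHg for elevated, 160/110 mmHg for the severe range) are assumed from commonly cited ACOG thresholds. A real system would rely on repeated readings, reported symptoms, and clinician-approved protocols rather than a single measurement.

```python
from dataclasses import dataclass
from enum import Enum


class Triage(Enum):
    ROUTINE = "routine"                      # log the reading, no alert
    CONTACT_CARE_TEAM = "contact_care_team"  # flag for clinician follow-up
    URGENT = "urgent"                        # severe range: advise immediate care


@dataclass
class BPReading:
    systolic: int   # mmHg, from a (hypothetical) connected home monitor
    diastolic: int  # mmHg


# Assumed thresholds in mmHg (systolic, diastolic), based on commonly cited
# ACOG cut-offs; illustrative only, not clinical guidance.
ELEVATED = (140, 90)
SEVERE = (160, 110)


def triage_reading(reading: BPReading) -> Triage:
    """Map a single home BP reading to a hypothetical escalation tier."""
    # Either number crossing the severe threshold is enough to escalate.
    if reading.systolic >= SEVERE[0] or reading.diastolic >= SEVERE[1]:
        return Triage.URGENT
    if reading.systolic >= ELEVATED[0] or reading.diastolic >= ELEVATED[1]:
        return Triage.CONTACT_CARE_TEAM
    return Triage.ROUTINE


def should_alert_clinician(tier: Triage) -> bool:
    """Any non-routine tier is routed to the care team."""
    return tier is not Triage.ROUTINE
```

For example, a reading of 162/104 mmHg lands in the `URGENT` tier, 142/88 in `CONTACT_CARE_TEAM`, and 118/76 in `ROUTINE`; only the first two would trigger a clinician alert. Keeping the rules this small and explicit makes them easy for clinicians to audit, which matters more in this setting than sophistication.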

What AI Got Right, and Where It Needs Guardrails

The phrase “ChatGPT saves an unborn baby” has taken on a symbolic role in healthcare circles, but its resonance goes far deeper than just a catchy headline. It represents a complex intersection of artificial intelligence, patient empowerment, and digital trust. But while the story gives us a reason to celebrate, it also gives us a reason to pause. Why? Because while ChatGPT got it right in this case, it just as easily could have gotten it wrong.

And that’s where health-tech leaders must lean in, not with fear, but with responsibility.

So, What Did ChatGPT Do?

Let’s be precise. In the Tarrien case, ChatGPT:

- Processed a non-specific symptom (jaw pressure).

- Cross-referenced potential causes, including serious ones like hypertension.

- Suggested that the symptom could be linked to a condition like preeclampsia.

- Encouraged a course of action: checking blood pressure.

It didn’t diagnose. It didn’t triage in the clinical sense. But it did fulfill the role of a conversational nudge engine, one that helped a patient transition from passive discomfort to proactive action.

This is known in health tech as non-clinical decision support: a space where AI can engage users, raise awareness, and recommend next steps, without pretending to replace doctors.

The Rise of Non-Diagnostic AI in Healthcare

Generative AI tools like ChatGPT are not FDA-cleared medical devices. But they’re increasingly acting as first points of contact in patient journeys. People use them to ask everything from “Is this rash serious?” to “Should I go to urgent care or wait?” and that behavior is growing.

A McKinsey report on digital front doors found that over 37% of U.S. adults had used AI chatbots for preliminary health questions within the last 6 months. Of those users, 74% said the interaction influenced their next step, whether it was seeking care, scheduling an appointment, or self-monitoring at home.

In that sense, tools like ChatGPT are quietly becoming digital gatekeepers, not clinicians, but guides. And that matters.

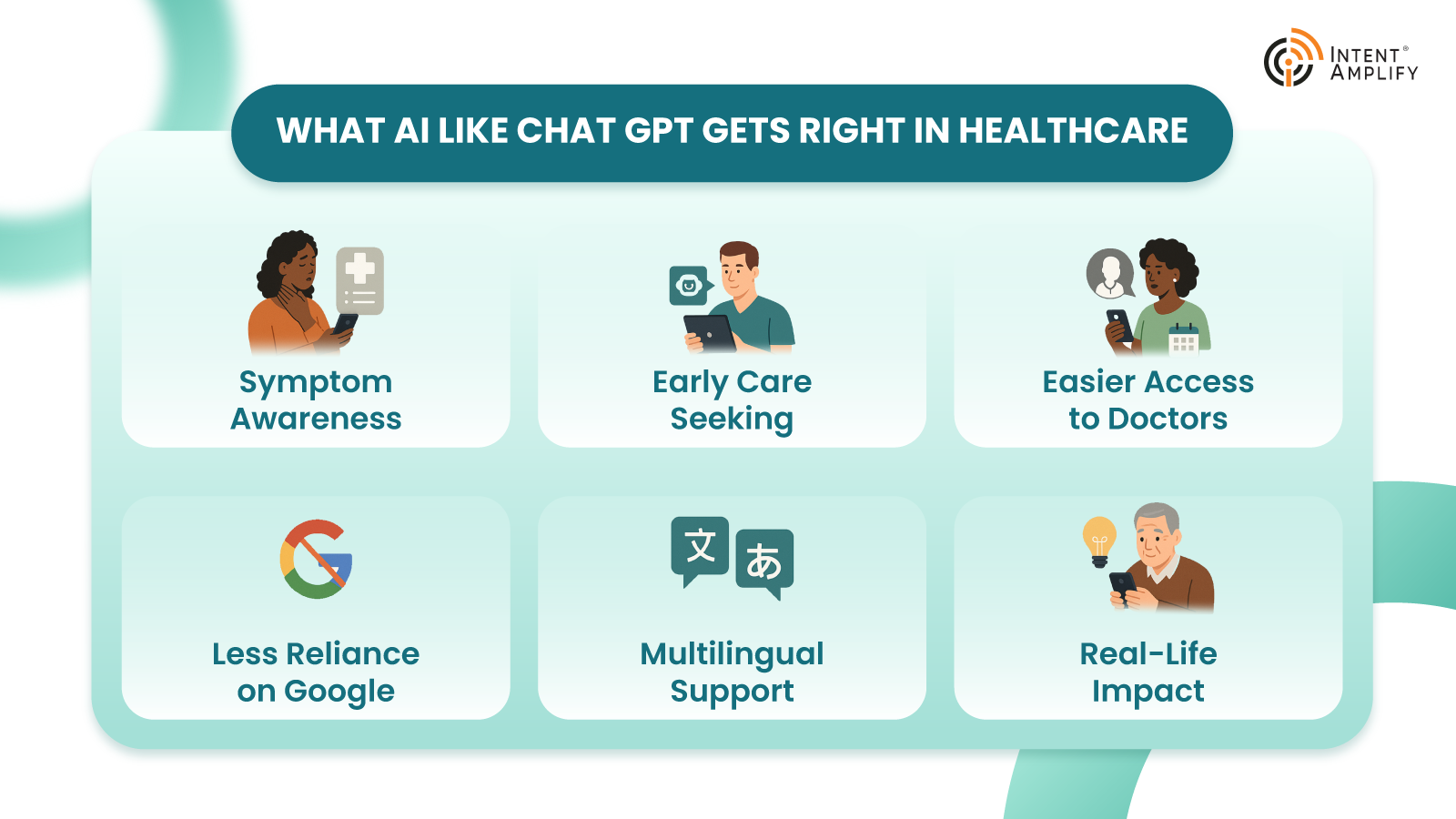

Strengths: What AI Like ChatGPT Gets Right

When used thoughtfully, ChatGPT and similar large language models (LLMs) can:

- Increase symptom awareness for underserved or busy populations.

- Encourage earlier care-seeking behavior, especially when symptoms seem minor.

- Remove friction from the decision to consult a healthcare provider.

- Reduce reliance on “Dr. Google” and questionable web forums.

- Support multilingual access, aiding non-native speakers in navigating health topics.

In the Tarrien case, these strengths came together at just the right moment.

Limitations: Where Guardrails Are Needed

Large language models don’t “know” in the human sense. They predict language patterns based on training data.

This means they might:

- Suggest incorrect or overly broad possibilities.

- Miss rare or atypical symptom clusters.

- Lack access to personal history, vitals, or lab results.

- Offer emotionally neutral responses in emotionally charged situations.

That last point matters more than it seems. When a pregnant woman asks a chatbot about a strange symptom, the tone of the response can affect whether she feels reassured or dismissed. Too clinical? She may ignore the suggestion. Too alarming? She may panic unnecessarily.

That’s why prompt engineering and empathy modeling in AI training are now seen as critical components of safe implementation.

Another concern is liability. If a patient acts, or fails to act, based on AI-generated content, who is responsible? The platform? The developers? The healthcare provider who recommended its use?

The legal landscape is still evolving, but one thing is certain: transparency must be baked into every user interaction. That includes clear disclaimers, visible referral suggestions (“speak to a doctor if…”), and data privacy protections.

Building Ethical and Effective Clinical-AI Ecosystems

To move forward responsibly, we need to view cases like Tarrien’s not as flukes, but as testbeds for a more integrated AI future.

Here’s what health tech leaders and clinical executives should be prioritizing:

1. AI-Augmented, Not AI-Driven

Use AI to support, not replace, clinical insight. The most effective models will blend algorithmic suggestions with clinician oversight and escalation protocols.

2. Interoperability with Real-World Data

AI tools must integrate with devices like smart BP monitors, wearable trackers, and patient-reported outcomes platforms to deliver context-aware guidance.

3. Training on Verified Clinical Corpora

Large models need guardrails and medical training sets vetted by specialists, not just publicly scraped health articles.

4. Ethical UX Design

Ensure AI platforms use inclusive, sensitive language; flag uncertainty where it exists; and offer non-directive, patient-centered guidance.

5. Transparency and Consent

Let users know what AI can and cannot do. Ensure patients understand that ChatGPT is not a doctor, even when it feels like one.

A Case for Scalable Innovation with Safety First

It’s tempting to treat the Tarrien case as lightning in a bottle, one story where everything aligned. But that’s a missed opportunity. What if we could design AI tools that intentionally created more of these outcomes?

The foundation exists:

- The technology is scalable.

- The models can be fine-tuned.

- The interfaces are already in people’s pockets.

Now it’s up to industry leaders to develop systems where moments like “ChatGPT saves an unborn baby” aren’t rare, but repeatable.

ChatGPT’s Nudge Shows What Digital Care Can Do

The story of how ChatGPT helped save an unborn baby is a mirror reflecting where healthcare is headed. When AI listens closely, responds responsibly, and nudges us to act in time, it becomes a companion in care. But this future won’t build itself. It demands bold leadership, thoughtful design, and unwavering commitment to patient safety.

The question is no longer if AI belongs in maternal care; it’s how we ensure every life has the same chance that Natallia and her baby did.

FAQs

1. How is AI like ChatGPT being used in maternal healthcare?

AI tools are increasingly used to support early detection of conditions like preeclampsia by prompting symptom checks, improving patient education, and aiding digital triage, without replacing clinical judgment.

2. Can AI improve early intervention in pregnancy complications?

Yes. AI can flag subtle symptoms and encourage timely action, reducing the delay between symptom onset and diagnosis, which is critical in complications like preeclampsia or gestational hypertension.

3. What are the risks of using generative AI tools in patient care?

Generative AI isn’t diagnostic and may offer incomplete or misinterpreted information if not properly governed. Guardrails, disclaimers, and clinician oversight are essential.

4. How should healthcare systems integrate AI chatbots safely?

Health systems should use AI for symptom education, decision support, and engagement, not diagnosis, while ensuring models are trained on diverse, clinically verified datasets.

5. What does the “ChatGPT saves an unborn baby” story teach health-tech leaders?

It highlights the urgent need for AI tools that balance accessibility with responsibility, supporting early care-seeking behavior while protecting clinical integrity and patient trust.

Dive deeper into the future of healthcare.

Keep reading on Health Technology Insights.
