By 2030, what many vendors now label AI-powered care will sit underneath core delivery models, reimbursement logic, workforce design, and patient engagement. Not a feature. A foundation.

AI isn’t just another disruptive technology in healthcare. It’s on track to reshape core delivery models, redefine competitive advantage, and expose the limits of governance frameworks that weren’t built for this scale of machine decision-making.

Start with scale. The global AI health sector is projected to grow explosively, from roughly $11 billion in 2021 to a valuation many times that size by 2030, reflecting adoption across diagnostics, administrative automation, decision support, and remote monitoring.

That kind of expansion explains why most health systems have pivoted from curiosity to deployment, and why leading practice communities are already prioritizing operational maturity around AI-driven models. AI-powered care is now both a clinical and commercial imperative rather than a speculative trend.

But the same momentum also explains why the strategic implications for boards and executives are so uncomfortable.

AI’s Promise Meets Structural Constraints

At the tactical level, AI’s value proposition is credible: better pattern recognition in imaging and genomics, faster interpretation of unstructured notes, more precise staffing forecasts, earlier identification of risk through predictive models, and automated patient communications. Yet, the gap between early wins and systemic transformation is vast.

To leverage AI meaningfully, you need interoperable data, rigorous validation pipelines, and multidisciplinary governance. Most health systems still struggle with basic interoperability and data quality. You can layer algorithms over bad data and get bad outputs faster. That’s not a philosophical point; it’s a practical failure mode people are living with today.

Investments that simply bolt AI onto legacy infrastructures without shoring up fundamentals risk marginal gains at best, wasted budgets at worst.
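To make the “bad data in, bad outputs faster” failure mode concrete, here is a minimal Python sketch of a data-quality gate that runs before any model sees a batch of records. The field names (`age_years`, `heart_rate_bpm`), the plausibility ranges, and the 5% tolerance are all hypothetical, chosen only for illustration; real rules would come from clinical and data-governance owners. The point is the ordering: check for missing and implausible values first, and hold the batch back if too many fail.

```python
# Hypothetical plausibility ranges for two illustrative fields.
RANGES = {
    "age_years": (0, 120),
    "heart_rate_bpm": (20, 250),
}


def quality_report(records, ranges=RANGES):
    """Count missing and out-of-range values per field."""
    report = {name: {"missing": 0, "out_of_range": 0} for name in ranges}
    for rec in records:
        for name, (lo, hi) in ranges.items():
            value = rec.get(name)
            if value is None:
                report[name]["missing"] += 1
            elif not lo <= value <= hi:
                report[name]["out_of_range"] += 1
    return report


def passes_gate(records, max_bad_fraction=0.05, ranges=RANGES):
    """Return True only if every field's bad fraction is within tolerance."""
    if not records:
        return False  # an empty batch is treated as a failure, not a pass
    report = quality_report(records, ranges)
    n = len(records)
    return all(
        (counts["missing"] + counts["out_of_range"]) / n <= max_bad_fraction
        for counts in report.values()
    )


if __name__ == "__main__":
    batch = [
        {"age_years": 54, "heart_rate_bpm": 72},
        {"age_years": 430, "heart_rate_bpm": None},  # likely data-entry errors
        {"age_years": 61, "heart_rate_bpm": 88},
    ]
    print(quality_report(batch))
    print(passes_gate(batch))  # False: one in three values per field is bad
```

The gate is deliberately blunt. A production pipeline would log which records failed and route them back for correction rather than silently dropping them.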

The Equity Paradox: Amplifier of Gaps

Public health institutions and global bodies like the WHO are right to emphasize AI’s potential for universal impact, as disease surveillance, outbreak response, chronic disease management, and system forecasting can all benefit.

But digital infrastructure is uneven. High-income systems are accelerating ahead, while middle- and low-income settings still struggle to digitize records, secure data pipelines, and recruit digital talent. On its own, AI doesn’t bridge these gaps; it accentuates them.

Without deliberate public policy and targeted investments, AI will make the rich systems richer and leave others further behind. It’s the technological equivalent of the Matthew Effect. AI-powered care becomes a luxury good.

Within wealthy nations, the inequity is already manifesting. Studies have reported that some widely used AI tools downplay symptoms in women and ethnic minorities and produce skewed clinical recommendations, in part because the underlying data reflects long-standing systemic bias.

The moral imperative here is not abstract. It’s about who actually benefits, and who is harmed, when algorithms touch real people.
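Skew of this kind is often invisible in a single aggregate accuracy figure; it surfaces only when performance is broken out by patient group. The Python sketch below assumes a hypothetical audit table of `(group, actual, predicted)` rows with 0/1 labels and computes each group’s false negative rate, the share of genuine cases the model missed, which is one rough analogue of “downplaying symptoms.” The group labels and data are synthetic, and a miss-rate gap is only one of several metrics a real bias audit might use.

```python
from collections import defaultdict


def false_negative_rate_by_group(rows):
    """rows: iterable of (group, actual, predicted) with 0/1 labels.

    Returns, per group, the share of true positive cases the model missed.
    Groups with no positive cases are omitted.
    """
    positives = defaultdict(int)
    missed = defaultdict(int)
    for group, actual, predicted in rows:
        if actual == 1:
            positives[group] += 1
            if predicted == 0:
                missed[group] += 1
    return {g: missed[g] / positives[g] for g in positives}


def max_gap(rates):
    """Largest difference in miss rate between any two groups."""
    if len(rates) < 2:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Synthetic audit rows for two hypothetical groups, "A" and "B".
    rows = [
        ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
        ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
    ]
    rates = false_negative_rate_by_group(rows)
    print(rates)           # {'A': 0.25, 'B': 0.75}
    print(max_gap(rates))  # 0.5
```

Here the model misses three times as many real cases in group B as in group A, a disparity that a single pooled accuracy number across both groups would not reveal.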

Governance Gaps and Regulatory Fragmentation

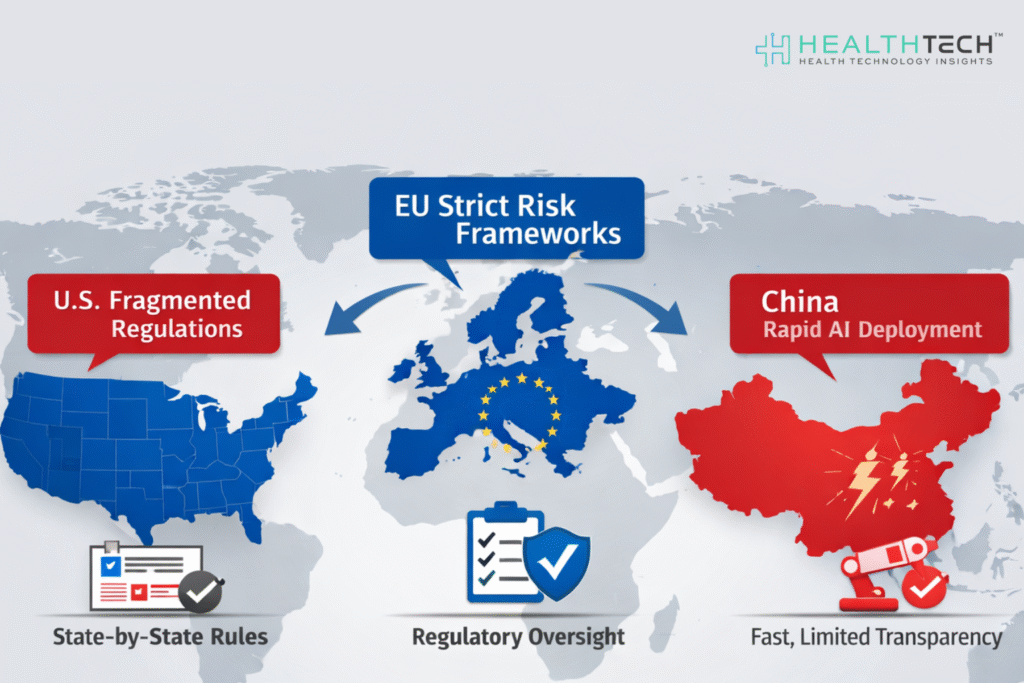

Regulation is trying to catch up. Efforts like the EU’s risk-based AI framework and international conventions on AI and human rights (with principles of transparency, accountability, and non-discrimination) are steps forward. But many of these frameworks are still being phased in, and others remain voluntary.

The U.S. healthcare landscape is more fragmented, driven by sectoral rules and state initiatives rather than cohesive federal governance.

International comparisons show divergent approaches: structured risk assessments in the EU, decentralized sectoral rules in the U.S., and rapid deployment in China with limited transparency.

That fragmentation matters. Without harmonized regulation, vendors can apply inconsistent standards across markets, clinicians can be left holding liability when AI recommendations fail, and patients bear risk with little recourse. Law and liability may evolve, but the window between widespread use and clear legal frameworks could span years.

Risk, Trust, and the “Black Box” Dilemma

AI’s technical promise masks deep ethical and epistemic challenges. Complex models, especially large language models and multimodal models, can be inscrutable: a “black box.”

A “black box” model is one where inputs and outputs are visible, but the internal logic is opaque, even to its creators. In healthcare, opacity isn’t just an engineering inconvenience. It undermines clinical trust. If a tool recommends a course of action without explainability, clinicians and patients are left with “trust me” assurances from a machine. That’s not sustainable in high-stakes contexts.

Transparency, and the absence of it, also shapes patient outcomes. When models cannot explain their reasoning, errors can go undetected, and unintended biases remain hidden. This isn’t a theoretical risk. It’s central to whether AI augments judgment or undermines it.

Workforce Impacts and Organizational Tension

AI isn’t ready to replace clinicians, but it will disrupt roles. Some administrative tasks will disappear. New roles in data curation, model governance, and digital service design will emerge. That shift creates tension.

Who owns AI skills? IT? Clinical operations? Quality and safety?

Without clear structures, AI initiatives become patchworks instead of strategic pillars.

There’s another tension: early adopters may attract talent and improve CE outcomes, while laggards struggle to compete on both staffing and performance.

Strategic Imperatives for Leaders

If you are an investor,

Invest early in AI risk frameworks that integrate security, privacy, and governance. Treat AI systems as evolving risk surfaces, not static assets.

If you are a healthcare leader,

Prioritize areas where value intersects with risk mitigation: clinical decision support with rigorous validation, and population health insights that drive measurable outcomes.

Across the executive suite,

Insist on performance metrics tied to patient outcomes, not implementation milestones.

Closing Reality Check

AI will reach deep into healthcare by 2030. Systems that embrace it superficially will see incremental improvements. Those that embed it strategically, aligned with governance, equity, and operational rigor, can achieve meaningful and lasting transformation.

The clearest divide won’t be digital vs analog. It will be between organizations that can balance innovation with stewardship and those that can’t. In practice, that’s the real strategic gap leaders must close if they want AI to be a tool of better care, not a mirror of systemic inequities.

FAQs

1. What does “AI-Powered Care” actually mean for a health system, beyond automation?

It means embedding AI into clinical and operational decisions, not just back-office tasks. Think triage support, risk stratification, capacity planning, and real-time care guidance. When AI influences outcomes and reimbursement, not just workflows, it becomes AI-powered care.

2. Where should hospitals start investing in AI to see measurable ROI fastest?

Start with high-friction, high-volume processes: documentation, coding, scheduling, and readmission prediction. These areas reduce cost and burnout quickly. Clinical AI without clean data and stable operations usually underperforms.

3. What are the biggest risks leaders underestimate when adopting AI in healthcare?

Data quality, liability, and security exposure. Poor inputs degrade models, unclear accountability complicates harm cases, and new integrations expand cyber risk. Most failures come from governance gaps, not bad algorithms.

4. How does U.S. regulation differ from the EU and China for healthcare AI?

The U.S. relies on fragmented, sector-specific rules and state policies. The EU uses structured risk classifications and centralized oversight. China prioritizes rapid deployment with less transparency. That divergence creates compliance complexity for vendors and providers operating globally.

5. Will AI reduce workforce pressure or create new skill shortages?

Routine admin work declines, but demand rises for data governance, clinical informatics, and AI oversight roles. Systems that reskill staff early gain productivity. Those that don’t face new bottlenecks instead of relief.

Dive deeper into the future of healthcare. Keep reading on Health Technology Insights.

To participate in our interviews, please write to our HealthTech Media Room at info@intentamplify.com