Generative AI (GenAI) in healthcare has shifted from “cool demos” to operational systems that reduce admin burden, improve patient access, and accelerate research—while raising the bar on privacy, governance, and clinical safety.

What began as experimental chatbots and pilot projects is now becoming core infrastructure—powering clinical documentation, patient access, revenue cycle operations, drug development, and medical research at scale. Health systems, life sciences companies, and digital health vendors are no longer asking if generative AI belongs in healthcare, but where it delivers measurable impact without compromising safety, privacy, or clinical trust.

This shift is being accelerated by the entry of major AI platform players such as OpenAI, Anthropic (Claude), and Groq, each bringing a different but complementary capability to the healthcare ecosystem, from secure clinical copilots and life-sciences reasoning models to ultra-low-latency inference designed for real-time clinical workflows.

Three signals that healthcare GenAI is entering a more “enterprise-grade” era:

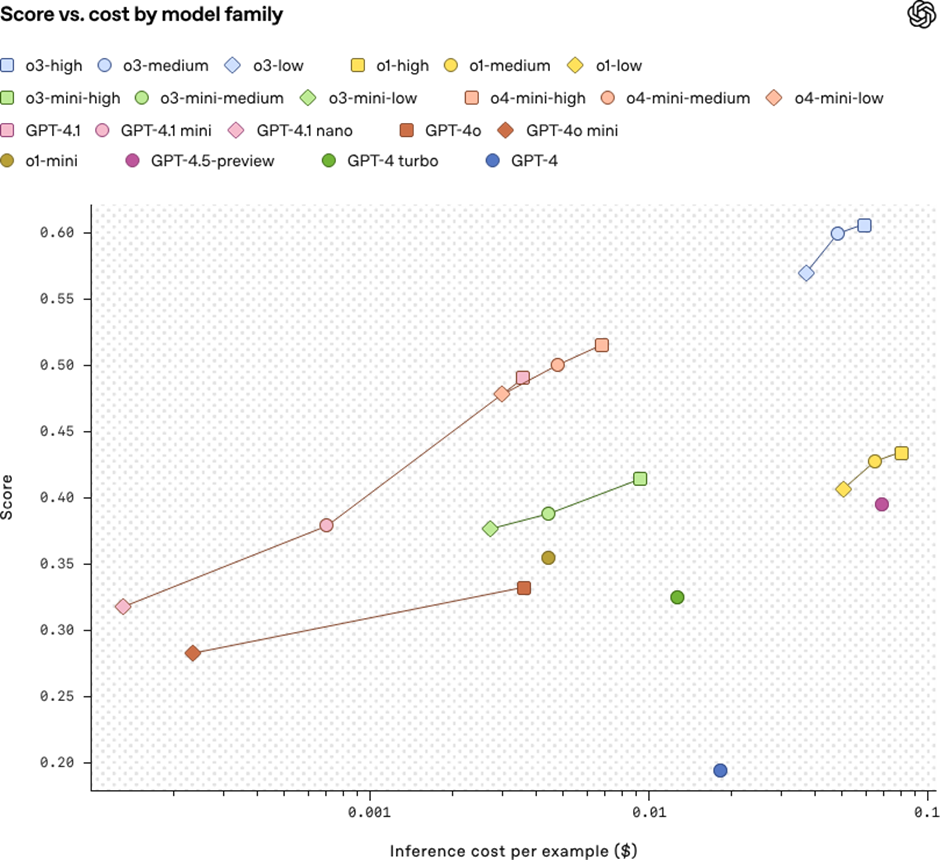

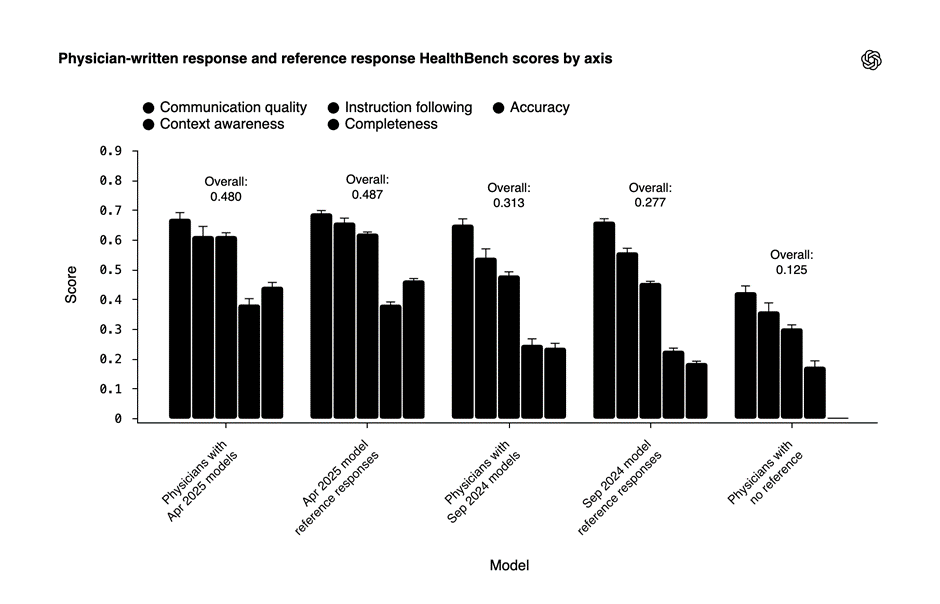

- OpenAI has introduced a dedicated healthcare offering (positioned around secure deployments and HIPAA-aligned use cases), plus published health-specific evaluation work like HealthBench.

- Anthropic (Claude) is publicly expanding into healthcare/life sciences via partnerships (e.g., Sanofi) and healthcare-focused initiatives/integrations.

- Groq is positioning itself as “inference infrastructure” for healthcare-grade workloads (including HIPAA-ready terms/controls and healthcare use-case messaging), and has been used in radiology reporting tooling (e.g., RADPAIR).

Early ROI signals reinforce this shift toward production-grade GenAI.

Across the industry, survey data shows that AI agent–driven workflows are already delivering measurable returns. In healthcare, the strongest ROI is emerging in tech support and patient experience functions—each cited by roughly one-third of respondents—reflecting the value of automating high-volume, non-clinical interactions.

In life sciences, the impact skews upstream: executives report the largest gains in product innovation and design, followed closely by marketing and automated document processing. The pattern is consistent across both domains—GenAI delivers the fastest returns where workflows are text-heavy, repetitive, and constrained by human throughput rather than clinical judgment.

In this article, we break down 15 high-impact generative AI healthcare use cases, with practical examples that show how organizations are moving from experimentation to production—while navigating regulatory, ethical, and operational realities unique to healthcare.

1) Ambient clinical documentation (AI scribe)

THEORY: Ambient Clinical Documentation as Cognitive Offloading

Ambient clinical documentation reframes note-taking from a manual afterthought into a background cognitive service. Instead of clinicians acting as data-entry clerks during or after patient encounters, generative AI systems continuously capture conversational context and transform it into structured clinical artifacts.

The core idea is cognitive offloading: clinicians focus on diagnostic reasoning and patient interaction, while the system absorbs, organizes, and drafts the clinical record. In a healthcare system already strained by workforce shortages, this is more than a productivity gain; it is a burnout mitigation strategy. Documentation burden, especially after-hours time spent in the EHR, has been repeatedly associated with clinician burnout, dissatisfaction, and intent to leave.

AI UNDER THE HOOD: From Audio Streams to EHR-Ready Artifacts

Underneath the surface, ambient documentation systems are not “just transcription.”

A typical pipeline includes:

- Real-time speech-to-text optimized for clinical vocabularies

- Speaker diarization (clinician vs patient vs caregiver)

- Medical entity extraction (symptoms, conditions, medications, dosages)

- Temporal reasoning (past history vs current complaint vs plan)

- Note synthesis into standardized formats (SOAP, H&P, discharge summary)

Modern implementations rely heavily on retrieval-augmented generation (RAG) to ground outputs in institutional templates and specialty-specific note structures. Crucially, outputs are generated as drafts, not final records—enabling clinician review, edit, and attestation, which is essential for medico-legal integrity.
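The last two pipeline stages, and the draft-only rule, can be sketched in a few lines. This is a minimal illustration that assumes upstream speech-to-text, diarization, entity extraction, and temporal tagging have already produced labeled segments; `Segment`, `SOAP_ROUTING`, `DraftNote`, `attest`, and `submit_to_ehr` are hypothetical names, not any vendor's API. The design point is that synthesis always yields a draft object, and only a clinician attestation makes it eligible for the record.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Segment:
    """Hypothetical output of upstream STT, diarization, and temporal tagging."""
    speaker: str   # "clinician", "patient", or "caregiver"
    temporal: str  # "history", "current", "exam", or "plan"
    text: str

# Assumed mapping from temporal tags to SOAP sections. Assessment is left
# empty on purpose: it holds the clinician's reasoning, not the model's.
SOAP_ROUTING = {
    "history": "Subjective",
    "current": "Subjective",
    "exam": "Objective",
    "plan": "Plan",
}

@dataclass
class DraftNote:
    sections: dict = field(default_factory=dict)
    status: str = "draft"
    attested_by: Optional[str] = None

    def attest(self, clinician_id: str) -> None:
        """Clinician review and sign-off is the only path to a final note."""
        self.attested_by = clinician_id
        self.status = "final"

def synthesize_soap(segments: list) -> DraftNote:
    """Group tagged segments into SOAP sections; the result is always a draft."""
    note = DraftNote(sections={s: [] for s in ("Subjective", "Objective", "Assessment", "Plan")})
    for seg in segments:
        section = SOAP_ROUTING.get(seg.temporal)
        if section is None:
            continue  # untagged content is not guessed into the note
        note.sections[section].append(f"[{seg.speaker}] {seg.text}")
    return note

def submit_to_ehr(note: DraftNote) -> bool:
    """Hypothetical EHR gate: unattested drafts are rejected."""
    return note.status == "final" and note.attested_by is not None
```

In this sketch `submit_to_ehr` returns `False` until `attest` has been called, which is the code-level equivalent of the clinician review step described above.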

GEN AI EXAMPLE: Clinical Copilots in Production

OpenAI has publicly highlighted clinical-copilot style deployments that operate in secure, healthcare-aligned environments, most notably its collaboration with Penda Health in Nairobi, where an LLM-based copilot (“AI Consult”) runs in the background of visits, reviews clinician documentation, and flags potential errors for the clinician to act on while the clinician keeps the final decision.

What’s important here is not the model itself, but the operating constraints:

- PHI-isolated environments

- No autonomous clinical decision-making

- Explicit clinician approval before EHR submission

This signals a shift away from “AI replaces clinicians” narratives toward a model in which AI augments clinical throughput without eroding accountability.

PRACTICAL EXAMPLES: Where This Is Working Today

Practical Example 1: Primary Care & Outpatient Clinics (Vendor-Centric)

In outpatient settings, ambient AI scribes are being deployed to generate SOAP notes within minutes of visit completion, with some early pilots reporting 30–50% reductions in after-hours documentation (“pajama time”).

- Clinicians conduct visits naturally

- Notes are auto-drafted in the background

- Providers review, edit, and sign off

This model has shown especially strong adoption in family medicine and internal medicine, where visit volumes are high and documentation patterns are repetitive.

Practical Example 2: Enterprise Health Systems & EHR-Integrated Scribes

Large health systems are integrating ambient documentation directly into EHR workflows (for example, Epic and Oracle Health, formerly Cerner), where AI-generated drafts appear as pre-filled encounter notes.

Key outcomes reported:

- Reduced documentation turnaround time

- Improved clinician-patient eye contact during visits

- Higher note consistency across providers

Importantly, these deployments succeed only when paired with:

- Human-in-the-loop validation

- Full audit trails

- Strict access controls and PHI governance

Failures tend to occur when organizations attempt to bypass review steps in pursuit of speed.

Why This Use Case Is the Bellwether

Ambient clinical documentation is often the first GenAI healthcare workload to move into production because:

- ROI is immediate and measurable

- Clinical risk is manageable with oversight

- Workflow integration is comparatively contained: the output is a draft that slots into existing review and sign-off steps

For this reason, it has become the bellwether use case for whether a healthcare organization is truly ready for generative AI—not in theory, but in practice.

2) Clinician “copilot” for medical knowledge + evidence lookup (RAG)

THEORY: Evidence Compression at the Speed of Care

Clinician copilots are not about “asking ChatGPT medical questions.” They are about compressing medical evidence into the clinician’s decision window.

Modern clinicians operate under extreme cognitive load: evolving guidelines, expanding drug formularies, specialty-specific protocols, and institution-level policies—all while managing time-constrained patient encounters. Traditional clinical decision support systems (CDSS) interrupt workflows with alerts and static links. Clinician copilots invert that model by acting as on-demand evidence synthesizers, delivering context-aware answers inside the clinical flow.

The value proposition is not automation of judgment, but latency reduction between question and evidence—turning minutes of searching into seconds of grounded insight, without displacing clinician authority.

AI UNDER THE HOOD: Retrieval-Augmented Clinical Reasoning

Clinician copilots are built on retrieval-augmented generation (RAG), not open-ended generation.

Core components include:

- Curated knowledge ingestion (clinical guidelines, peer-reviewed journals, drug databases, institutional protocols)

- Semantic retrieval tuned for clinical terminology

- Citation-bound generation, where every answer is traceable to source documents

- Prompt and output constraints to prevent diagnosis or treatment directives

- Session logging and auditability for compliance and clinical governance

Unlike consumer chatbots, these systems are intentionally epistemically constrained—they are designed to say “here is the evidence”, not “here is what to do.” This distinction is foundational for safe deployment in regulated care environments.
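The “here is the evidence” contract can be illustrated with a toy retriever. This is a sketch under stated assumptions: a tiny in-memory corpus stands in for curated guidelines, bag-of-words overlap stands in for a retriever tuned to clinical terminology, and `DIRECTIVE_REQUEST` is a naive keyword filter standing in for real output constraints. `Passage`, `retrieve`, and `answer` are hypothetical names. What the sketch preserves is the behavior: every returned statement carries a source ID, and the system returns an explicit status rather than ungrounded text.

```python
import re
from collections import Counter
from dataclasses import dataclass

@dataclass
class Passage:
    source_id: str  # e.g. "guideline-A/2"; identifiers are illustrative
    text: str

# Naive stand-in for a policy filter that blocks diagnosis/treatment directives.
DIRECTIVE_REQUEST = re.compile(r"\b(diagnose|what should i prescribe|which dose should)\b", re.I)

def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, corpus: list, k: int = 2, min_overlap: int = 2) -> list:
    """Rank passages by shared-token count, a placeholder for semantic retrieval."""
    q = tokenize(query)
    scored = []
    for p in corpus:
        overlap = sum((q & tokenize(p.text)).values())
        if overlap >= min_overlap:
            scored.append((overlap, p))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]

def answer(query: str, corpus: list) -> dict:
    """Return evidence with source IDs, or an explicit refusal / no-evidence status."""
    if DIRECTIVE_REQUEST.search(query):
        return {"status": "refused", "reason": "directive request", "evidence": []}
    hits = retrieve(query, corpus)
    if not hits:
        return {"status": "no_evidence", "evidence": []}
    # A production system would have an LLM summarize these passages with inline
    # citations; returning them verbatim keeps the sketch fully traceable.
    return {"status": "ok", "evidence": [(p.source_id, p.text) for p in hits]}
```

Returning `no_evidence` instead of falling back to open-ended generation is the design choice that keeps the copilot epistemically constrained.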

GEN AI EXAMPLE: Secure Clinical Copilots in Practice

OpenAI positions “ChatGPT for Healthcare” as a secure, enterprise-grade workspace for clinicians to query medical knowledge and receive cited, evidence-backed responses. The emphasis is on trusted-source grounding, PHI-safe environments, and auditable interactions—marking a shift from general-purpose LLMs to workflow-native clinical knowledge support.

These deployments explicitly avoid autonomous clinical decision-making, reinforcing the model of GenAI as cognitive augmentation, not clinical authority.

Source: OpenAI healthcare product positioning and public disclosures

PRACTICAL EXAMPLES: Where Clinician Copilots Deliver Real Value

Practical Example 1: Evidence Lookup During Clinical Rounds

During inpatient rounds, clinicians frequently need to validate guideline recommendations, drug contraindications, or comparative treatment evidence in real time. Clinician copilots allow providers to query evidence conversationally—without leaving the EHR or disrupting team flow.

Impact observed in pilots:

- Reduced reliance on memory under pressure

- Fewer workflow interruptions

- Faster alignment with evidence-based care pathways

This use case is particularly valuable in oncology, infectious disease, and critical care, where guidelines evolve rapidly.

Source: Health system clinical decision-support pilots; enterprise provider workflow studies

Practical Example 2: Specialty-Specific Knowledge Copilots (Vendor-Centric)

Health systems and healthtech vendors are deploying specialty-tuned copilots—for oncology, cardiology, and rare diseases—grounded exclusively in approved protocols and curated literature. These copilots assist clinicians in exploring differential considerations, drug–drug interactions, and guideline alignment while preserving clinician interpretation and final decision-making.

By limiting the knowledge domain, these systems tend to earn higher trust, carry lower hallucination risk, and see better clinical adoption.

Source: Enterprise healthtech deployments; OpenAI healthcare use case discussions

Why This Use Case Matters More Than It Appears

Clinician copilots are often underestimated because they don’t “do” clinical actions. In reality, they reshape how clinical reasoning is supported at scale. They reduce cognitive drag, standardize evidence access, and quietly elevate the floor of decision quality—without triggering regulatory red flags.

As a result, clinician copilots are becoming one of the fastest paths from GenAI pilot to production in healthcare—precisely because they respect the boundary between information and judgment.

3) Prior authorization and payer documentation drafting

THEORY: Translating Clinical Intent into Payer-Readable Logic

Prior authorization is not a clinical problem—it is a translation problem.

Clinicians think in terms of diagnosis, intent, and patient context. Payers operate on policy logic, codes, and documentation sufficiency. The friction between these two systems is where delays, denials, and revenue leakage occur. Generative AI addresses this gap by acting as a semantic bridge—translating clinical records into payer-aligned narratives that satisfy policy requirements without forcing clinicians into administrative workflows.

The real value of GenAI here is not automation for speed alone, but predictability: reducing ambiguity, rework, and avoidable denials in one of healthcare’s most operationally brittle processes.

AI UNDER THE HOOD: Policy-Aware Narrative Generation

Prior-authorization automation relies on policy-grounded generation, not free-form text.

Core components typically include:

- Policy ingestion and versioning (payer rules, coverage criteria, medical necessity language)

- Structured data extraction (diagnosis codes, procedure codes, clinical indicators)

- Rule-to-narrative mapping, where extracted data is aligned to payer-specific requirements

- Checklist completion logic, ensuring all mandatory fields and attachments are present

- Explainability layers, enabling trace-back from generated text to source documentation

Generative AI is used to synthesize coherent, payer-readable narratives, while deterministic logic validates codes, criteria, and completeness—making the output defensible during audits, appeals, and disputes.
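The split between deterministic checks and generated narrative can be sketched as follows. All names are hypothetical: `PolicyCriteria` stands in for a versioned payer policy, the example criteria are invented, and a simple template stands in for the LLM call. The sketch shows the ordering the paragraph describes: completeness checks run first, and each narrative sentence records which chart field it came from, so reviewers can trace it back during audits or appeals.

```python
from dataclasses import dataclass

@dataclass
class PolicyCriteria:
    """Hypothetical, versioned representation of one payer policy."""
    policy_id: str
    version: str
    required_fields: list
    required_attachments: list

def check_completeness(chart: dict, attachments: set, policy: PolicyCriteria) -> list:
    """Deterministic checklist: list every missing field or attachment."""
    missing = [f"field:{f}" for f in policy.required_fields if chart.get(f) in (None, "")]
    missing += [f"attachment:{a}" for a in policy.required_attachments if a not in attachments]
    return missing

def draft_narrative(chart: dict, policy: PolicyCriteria) -> list:
    """Return (sentence, source_field) pairs; a template stands in for the LLM."""
    return [
        (f"{name.replace('_', ' ').capitalize()}: {chart[name]}.", name)
        for name in policy.required_fields
    ]

def prepare_submission(chart: dict, attachments: set, policy: PolicyCriteria) -> dict:
    missing = check_completeness(chart, attachments, policy)
    if missing:
        # Route back to staff rather than writing a narrative on thin evidence.
        return {"ready": False, "missing": missing}
    return {
        "ready": True,
        "policy": f"{policy.policy_id}@{policy.version}",
        "narrative": draft_narrative(chart, policy),
    }
```

Stamping the policy ID and version on each submission is what makes the output defensible later: a reviewer can see exactly which rule set the narrative was written against.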

GEN AI EXAMPLE: Workflow-Orchestrated Prior Auth Drafting

Modern healthcare AI platforms increasingly position prior authorization as an orchestrated workflow, where GenAI drafts letters and justifications while upstream and downstream systems handle eligibility checks, coding validation, and submission routing.

In these stacks, GenAI is applied specifically where humans struggle most:

- Interpreting payer policies

- Translating charts into medical-necessity language

- Maintaining consistency across submissions

This approach reflects a broader shift toward administrative GenAI—high ROI, low clinical risk, and immediate operational impact.

Source: Healthcare workflow automation platform disclosures; payer–provider admin automation case studies

PRACTICAL EXAMPLES: Where This Delivers Immediate ROI

Practical Example 1: Health Systems Reducing Authorization Turnaround Time

Large provider organizations deploy GenAI to pre-draft prior-auth letters directly from EHR data, flag missing documentation, and align narratives with payer-specific criteria before submission.

Observed outcomes in pilots:

- Faster first-pass submission readiness

- Reduced manual rework by revenue cycle teams

- Fewer “incomplete documentation” denials

This shifts prior authorization from a reactive clean-up task to a front-loaded, quality-controlled workflow.

Source: Revenue cycle management (RCM) transformation reports; provider admin automation pilots

Practical Example 2: Appeals & Denial Management (Vendor-Centric)

When authorizations are denied, GenAI systems assist by generating appeal narratives that directly reference payer policy language, documented clinical evidence, and historical approval patterns.

Instead of manual letter rewriting, teams receive:

- Policy-aligned appeal drafts

- Clear rationale mapping

- Faster resubmission cycles

This can significantly improve throughput in high-volume specialties such as imaging, orthopedics, and oncology.

Source: Payer operations disclosures; healthcare admin AI vendor case studies

Why This Use Case Is Strategically Underrated

Prior authorization is one of the least visible but most expensive friction points in healthcare. Because it sits between clinical care and reimbursement, it often escapes innovation focus. Generative AI changes that by turning prior auth into a computationally solvable problem—structured, auditable, and improvable over time.

For many organizations, this becomes one of the first GenAI use cases to reach production, not because it is flashy, but because it is operationally unavoidable.

4) Revenue Cycle Management (RCM): coding assistance & claim narrative generation

THEORY: Turning Clinical Reality into Reimbursable Truth

Revenue Cycle Management breaks down at the seam between care delivery and financial representation.

Clinicians document care for continuity and safety. Payers reimburse based on coded abstractions and narrative sufficiency. The distance between those two representations is where undercoding, denials, and revenue leakage accumulate. Generative AI addresses this gap by acting as a semantic compiler—translating rich, unstructured clinical encounters into claim-ready narratives that accurately reflect services rendered.

The strategic value here is not aggressive coding. It is coding completeness and narrative fidelity—ensuring the claim mirrors clinical reality closely enough to withstand payer scrutiny while maximizing legitimate reimbursement.

AI UNDER THE HOOD: Semantic Compression with Compliance Guardrails

RCM-focused GenAI systems are designed around structured summarization, not autonomous billing.

Key technical components include:

- Encounter summarization across notes, orders, labs, and procedures

- Evidence-linked narrative generation, tying every claim statement back to chart text

- Code suggestion engines (ICD, CPT, DRG) operating as recommendations, not decisions

- Deterministic validation layers to catch mismatches, missing documentation, or unsupported codes

- Auditability and traceability, enabling compliance teams to review exactly how narratives were constructed

Generative AI handles the language and structure of claims, while rules engines and humans retain authority over submission and compliance. This division is why RCM has become one of the safest early GenAI deployments in healthcare.
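The “recommendations, not decisions” pattern can be sketched as a validation pass over model-suggested codes. This is an illustration only: the suggestions are assumed to arrive with a quoted evidence string and a confidence score, and the two rules shown (cited evidence must appear verbatim in the chart; low-confidence suggestions are flagged) are simplified stand-ins for a real rules engine. `CodeSuggestion` and `validate_suggestions` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class CodeSuggestion:
    code: str          # e.g. an ICD-10-CM code proposed by a model
    evidence: str      # chart text the model quotes as support
    confidence: float  # model-reported score (assumed to be available)

def validate_suggestions(note_text: str, suggestions: list, min_conf: float = 0.7) -> dict:
    """Split suggestions into ones a coder can review and ones flagged as unsupported."""
    reviewable, flagged = [], []
    lowered = note_text.lower()
    for s in suggestions:
        if not s.evidence or s.evidence.lower() not in lowered:
            flagged.append((s.code, "evidence not found in chart"))
        elif s.confidence < min_conf:
            flagged.append((s.code, "low confidence"))
        else:
            reviewable.append(s.code)
    # Nothing here submits a claim: both lists go to a human coder.
    return {"reviewable": reviewable, "flagged": flagged}
```

Requiring a verbatim quote is a blunt but useful guard against upcoding: a code with no supporting chart text never reaches the "reviewable" list.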

GEN AI EXAMPLE: Early-ROI GenAI in Production RCM Stacks

RCM has emerged as one of the most common early-ROI GenAI use cases precisely because outcomes are measurable: faster claim preparation, improved first-pass acceptance, and reduced manual rework.

In modern RCM stacks, GenAI is used to:

- Draft claim narratives aligned to payer expectations

- Surface likely codes based on documented services

- Highlight documentation gaps before submission

These systems operate upstream of billing submission, giving coding and compliance teams higher-quality starting material rather than replacing expert judgment.

Source: RCM platform disclosures; healthcare financial operations case studies

PRACTICAL EXAMPLES: Where GenAI Changes the Unit Economics

Practical Example 1: Hospital Billing Teams Improving First-Pass Yield

Large provider organizations deploy GenAI to generate claim-ready summaries immediately after encounters, reducing back-and-forth between clinicians and coding teams.

Observed impacts in pilots:

- Shorter coding turnaround times

- Fewer clarification queries sent back to providers

- Higher first-pass claim acceptance rates

By resolving ambiguity early, GenAI shifts revenue cycle work left, where it is cheaper and faster to fix.

Source: Hospital RCM transformation initiatives; provider finance optimization pilots

Practical Example 2: Specialty Practices Managing Complex Coding (Vendor-Centric)

Specialty practices (e.g., cardiology, orthopedics, oncology) use GenAI-assisted coding to manage high documentation complexity and frequent payer scrutiny.

GenAI helps by:

- Drafting detailed, evidence-linked narratives

- Suggesting code combinations aligned with documented care

- Flagging underdocumentation risks before submission

This improves both compliance posture and revenue predictability without encouraging upcoding.

Source: Specialty RCM vendor implementations; practice management case studies

Why Revenue Cycle Operations Are GenAI’s Fastest Path to Production Value

RCM succeeds early with GenAI because:

- The workflows are repetitive and text-heavy

- Outcomes are financially measurable

- Clinical risk is indirect and controllable

- Human oversight is already embedded in the process

As a result, RCM often becomes the proof point leadership teams use to justify broader GenAI investment across clinical and operational domains.

5) Call-center and patient access automation (scheduling, benefits, FAQs)

THEORY: Front-Door Automation Without Clinical Risk

Patient access is healthcare’s highest-volume, lowest-margin interaction layer—and one of its most fragile.

Scheduling calls, eligibility checks, benefits questions, and intake routing consume massive operational bandwidth, yet add little clinical value. The core challenge is not intelligence, but scale without error. Generative AI enters this layer not as a clinician substitute, but as a front-door traffic controller—handling predictable, non-diagnostic interactions so human staff can focus on exceptions and care coordination.

The strategic insight: patient access automation succeeds when GenAI is treated as administrative infrastructure, not conversational medicine. This boundary is what enables adoption without triggering regulatory or safety backlash.

AI UNDER THE HOOD: Policy-Constrained Conversational Systems

Patient-facing GenAI agents are deliberately constrained systems, architected for safety over expressiveness.

Key components include:

- Intent classification (scheduling, billing, benefits, referrals, escalation)

- Policy-constrained response generation, often using templated or semi-structured replies

- Eligibility and benefits lookups via payer and internal systems

- Conversation state management to preserve continuity across handoffs

- Hard escalation triggers for clinical language, ambiguity, or patient distress

Unlike clinical copilots, these agents are designed to stop early and escalate often. Their success depends on knowing when not to respond—an inversion of typical chatbot optimization.
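A minimal sketch of “stop early and escalate often” follows, assuming a keyword matcher in place of a trained intent classifier. The intents mirror the components listed above; the trigger phrases in `ESCALATION_TRIGGERS` are illustrative assumptions, not a validated clinical safety list, and `route` is a hypothetical function name. Escalation checks run before intent classification, so clinical or distressed language never reaches an automated reply, and ambiguity (no intent or several) is also treated as a reason to hand off.

```python
import re

# Illustrative trigger phrases only; a production list would be clinically governed.
ESCALATION_TRIGGERS = re.compile(
    r"\b(chest pain|can't breathe|cannot breathe|suicid\w*|overdose|bleeding|should i take|is it normal)\b",
    re.I,
)

INTENT_KEYWORDS = {
    "scheduling": ("appointment", "reschedule", "cancel", "book"),
    "billing": ("bill", "invoice", "payment", "statement"),
    "benefits": ("copay", "deductible", "coverage", "covered"),
    "referrals": ("referral", "specialist"),
}

def route(message: str) -> str:
    """Return an intent to automate, or 'escalate' to hand off to a human."""
    if ESCALATION_TRIGGERS.search(message):
        return "escalate"
    text = message.lower()
    matches = [intent for intent, words in INTENT_KEYWORDS.items() if any(w in text for w in words)]
    # Zero or multiple intents means the request is ambiguous: hand it off.
    return matches[0] if len(matches) == 1 else "escalate"
```

The inversion is visible in the return path: the default outcome is `"escalate"`, and automation happens only when exactly one non-clinical intent is detected.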

GEN AI EXAMPLE: Purpose-Built, Non-Diagnostic Agents

The healthcare AI market has seen the rise of purpose-built, non-diagnostic agents explicitly designed for patient access and engagement. Companies like Hippocratic AI position their systems around safety-first principles: no diagnosis, no treatment advice, and strict escalation protocols.

This positioning reflects a broader industry shift away from general-purpose chatbots toward domain-limited conversational agents that align with healthcare’s regulatory and ethical constraints.

Source: Healthcare AI market positioning; Hippocratic AI public disclosures

PRACTICAL EXAMPLES: Where Patient Access Automation Scales Safely

Practical Example 1: Scheduling & Intake at Scale

Health systems deploy GenAI agents to handle appointment scheduling, rescheduling, intake questionnaires, and referral routing across phone, chat, and web channels.

Operational outcomes observed in pilots:

- Reduced call wait times

- Higher appointment completion rates

- Lower staff burnout in front-desk teams

Human agents intervene only when scheduling conflicts, clinical questions, or complex insurance scenarios arise.

Source: Health system patient access automation pilots; contact center optimization studies

Practical Example 2: Eligibility, Benefits, and FAQs (Vendor-Centric)

Patient access agents are increasingly used to answer high-volume questions about insurance coverage, copays, deductibles, and benefits eligibility—areas that traditionally overwhelm call centers.

GenAI agents:

- Retrieve payer-specific benefits information

- Deliver templated, compliant responses

- Route complex or ambiguous cases to billing specialists

This can dramatically reduce repetitive call load while maintaining accuracy and compliance.

Source: Revenue cycle and patient access vendor case studies

Why Patient Access Is a Strategic Entry Point for GenAI

Patient access automation works because:

- Interactions are highly repetitive

- Clinical risk is low and controllable

- ROI is immediate and visible

- Safety constraints are architecturally enforceable

As a result, patient access often becomes the first patient-facing GenAI system to reach production—setting expectations for how generative AI should behave in healthcare: helpful, bounded, and accountable.

6) Patient-friendly lab and imaging result explanations

THEORY: Translating Clinical Signal into Human Comprehension

Lab and imaging results are clinically precise—but experientially opaque.

Patients receive numbers, ranges, flags, and radiology impressions written for clinicians, not humans. This creates anxiety, misinterpretation, and unnecessary follow-up calls—often before a physician has had a chance to contextualize the results. Generative AI addresses this gap by acting as a semantic interpreter, converting clinical signal into patient-comprehensible narratives without crossing into diagnosis or treatment.

The goal is not reassurance or medical advice. It is comprehension scaffolding—helping patients understand what the result represents, why it exists, and what questions are appropriate to ask next.

AI UNDER THE HOOD: Guardrailed Clinical-to-Consumer Translation

Patient-facing result explanation systems are among the most tightly constrained GenAI deployments in healthcare.

Core technical elements include:

- Structured result ingestion (labs, imaging impressions, reference ranges)

- Contextual normalization (age, sex, test type, historical trends)

- Clinician-approved content blocks mapped to result categories

- Retrieval-augmented generation (RAG) grounded in validated medical explanations

- Safety filters and disclaimers that explicitly avoid diagnosis, prognosis, or treatment advice

Outputs are framed in probabilistic, educational language (“this can sometimes indicate…”) and are intentionally designed to defer authority back to the clinician, not replace it.
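The combination of structured ingestion and clinician-approved content blocks can be sketched deterministically: code decides where a value sits relative to the lab's reference range, and the wording comes from pre-approved text rather than free generation. The test name, range, and sentences below are placeholders for illustration, not clinical content; `LabResult`, `APPROVED_BLOCKS`, `categorize`, and `explain` are hypothetical names. In a fuller system a RAG/LLM layer would, at most, rephrase within these blocks.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class LabResult:
    test: str
    value: float
    low: float   # reference range lower bound (lab-specific)
    high: float  # reference range upper bound (lab-specific)

# Placeholder wording; real blocks would be written and approved by clinicians.
APPROVED_BLOCKS = {
    ("hemoglobin", "within"): "Hemoglobin measures the oxygen-carrying protein in red blood cells. Your result is within the lab's typical range.",
    ("hemoglobin", "below"): "Hemoglobin measures the oxygen-carrying protein in red blood cells. Your result is below the lab's typical range, which can have many causes.",
    ("hemoglobin", "above"): "Hemoglobin measures the oxygen-carrying protein in red blood cells. Your result is above the lab's typical range, which can have many causes.",
}
CLOSING = ("Your care team will review this result with you. Questions you might ask: "
           "What could explain this result? Is any follow-up needed?")

def categorize(r: LabResult) -> str:
    if r.value < r.low:
        return "below"
    if r.value > r.high:
        return "above"
    return "within"

def explain(r: LabResult) -> Optional[str]:
    block = APPROVED_BLOCKS.get((r.test.lower(), categorize(r)))
    if block is None:
        return None  # no approved wording: show the raw result and defer to the clinician
    return f"{block} {CLOSING}"
```

Returning `None` when no approved block exists is deliberate: the safe fallback is silence plus the raw value, not an improvised explanation.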

GEN AI EXAMPLE: Record-Connected Explanations at Scale

Anthropic’s HealthEx integration describes the use of generative AI to produce record-connected summaries and explanations of health information, including lab and diagnostic results. The emphasis is on clarity, contextualization, and safe language—transforming raw medical data into explanations patients can understand without inducing false certainty or panic.

By operating directly against structured health records, these systems avoid generic explanations and instead tailor responses to the specific test and context.

Source: Anthropic HealthEx integration disclosures

PRACTICAL EXAMPLES: Where This Improves Experience Without Risk

Practical Example 1: Patient Portals Reducing Anxiety and Call Volume

Health systems integrate GenAI explanations directly into patient portals, where lab results are released automatically. Alongside numeric values, patients receive plain-language summaries explaining:

- What the test measures

- Why it is commonly ordered

- Whether results are within or outside typical ranges

- Questions to discuss with their clinician

Observed outcomes in pilots:

- Lower inbound calls to care teams

- Reduced patient anxiety between result release and follow-up

- Higher patient satisfaction with digital access tools

Source: Health system patient portal enhancement pilots; digital experience studies

Practical Example 2: Imaging Results for Non-Clinical Audiences (Vendor-Centric)

Radiology reports are among the most intimidating patient documents. GenAI systems generate patient-safe imaging summaries that explain findings (e.g., “no acute abnormalities,” “age-related changes”) without interpretation beyond approved language.

These summaries:

- Mirror radiologist-approved phrasing

- Avoid speculative language

- Reinforce that the ordering physician will provide final interpretation

This improves transparency while preserving clinical authority.

Source: Diagnostic imaging vendor implementations; patient experience modernization initiatives

Why This Use Case Is a Trust Multiplier

Patient-friendly explanations are not about automation efficiency—they are about trust preservation. When patients understand their data, they are less likely to misinterpret, overreact, or disengage. Generative AI succeeds here precisely because it is restrained, contextual, and deferential to clinician judgment.

As healthcare organizations expand digital access, this use case often becomes one of the first GenAI features patients encounter, making it a defining moment for how safe, human, and responsible AI feels in care delivery.

BUYER SIGNALS: WHO OWNS, BUYS, AND FUNDS EACH USE CASE

| Use Case | Primary Buyer / Owner | Budget Origin | Trigger Signals | Buying Motion |
| --- | --- | --- | --- | --- |
| Ambient Clinical Documentation (AI Scribe) | CMIO; Chief Medical Officer (CMO) | Clinical operations; provider productivity; innovation pilots | Rising clinician burnout; excessive after-hours documentation (“pajama time”); low clinician satisfaction scores | Bottom-up pilot → enterprise rollout; strong clinician advocacy required |
| Clinician Copilot (Medical Knowledge & Evidence Lookup – RAG) | CMIO; Chief Quality Officer (CQO) | Clinical quality & safety; medical education; IT innovation | Variability in guideline adherence; high cognitive load in complex specialties; dissatisfaction with alert-based CDSS | Controlled specialty pilots; governance-heavy rollout via clinical committees |
| Prior Authorization & Payer Documentation Drafting | VP Revenue Cycle; Director of Utilization Management | Revenue integrity; denials management; admin automation | High prior-auth denial rates; long authorization turnaround times; clinician frustration with admin work | Ops-led purchase with CFO visibility; rapid scale once denial reduction is proven |
| Revenue Cycle Management (RCM): Coding Assistance & Claim Narratives | CFO; VP Revenue Cycle / Revenue Integrity | Finance; revenue optimization; compliance & audit | Rising DNFB days; coding backlogs; increased payer audits or clawbacks | CFO-sponsored pilots; strong ROI accelerates enterprise adoption |
| Call-Center & Patient Access Automation | COO; VP Patient Access / Contact Center Ops | Operations; patient experience (CX); cost containment | Long call wait times; high call abandonment; staffing shortages | Rapid ops-led deployment; vendor safety boundaries critical |
| Patient-Friendly Lab & Imaging Result Explanations | Chief Patient Experience Officer; CMIO (shared) | Digital front door; patient engagement; CX modernization | High inbound calls after lab release; poor portal engagement; patient confusion complaints | Digital-first rollout via patient portals; clinician-approved content required |
| Post-Discharge Follow-Ups & Care Plan Reinforcement | VP Care Management; Population Health Leader | Value-based care programs; readmission reduction; care coordination | Elevated 30-day readmission rates; poor medication adherence; follow-up gaps | Outcomes-driven pilots; expansion tied to measurable readmission reduction |

Emerging / Secondary Production Use Cases

7) Post-discharge follow-ups and care plan reinforcement

What it does: Automated messages that summarize discharge instructions and check adherence barriers (“Did you pick up meds?”).

Example: Automated follow-ups can improve outcomes by closing the “forgotten instructions” gap after patients leave the hospital.

Implementation notes: Personalized but policy-safe; multilingual support; escalation for red-flag responses.

8) Clinical trial matching (patients ↔ trials)

What it does: Reads EHR notes + structured fields to match inclusion/exclusion criteria; generates a rationale.

Example: High-value because it can speed recruitment and widen patients' access to trials.

Implementation notes: Strong governance on data access; explainable match rationale; avoid “guaranteed eligibility” language.
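Criteria matching with an explainable rationale can be sketched as follows, assuming the eligibility criteria have already been converted (for example, by an LLM with study-staff review) into simple structured checks over patient fields. The `Criterion` format, field names, and thresholds are hypothetical. Note the three-way outcome: undocumented data yields "needs review", never an assumed match, and even a full match is labeled "potential", which keeps the output away from “guaranteed eligibility” language.

```python
import operator
from dataclasses import dataclass

OPS = {">=": operator.ge, "<=": operator.le, "==": operator.eq, "!=": operator.ne}

@dataclass
class Criterion:
    field: str
    op: str       # one of the keys in OPS
    value: object
    kind: str     # "inclusion" or "exclusion"

def screen(patient: dict, criteria: list) -> dict:
    rationale, missing, failed = [], [], False
    for c in criteria:
        if c.field not in patient:
            missing.append(c.field)
            rationale.append(f"{c.kind}: {c.field} not documented")
            continue
        holds = OPS[c.op](patient[c.field], c.value)
        # An inclusion criterion must hold; an exclusion criterion must not.
        ok = holds if c.kind == "inclusion" else not holds
        failed = failed or not ok
        rationale.append(f"{c.kind}: {c.field} {c.op} {c.value!r} -> {'met' if holds else 'not met'}")
    if failed:
        status = "not a match"
    elif missing:
        status = "needs review"
    else:
        status = "potential match"  # still requires investigator confirmation
    return {"status": status, "rationale": rationale, "missing": missing}
```

The per-criterion `rationale` list is what a coordinator would see, so every status can be traced to the specific checks behind it.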

9) Trial site operations: protocol summarization & deviation detection

What it does: Summarizes trial protocols into actionable checklists; flags potential deviations from notes.

Example: Life sciences organizations increasingly use GenAI to improve efficiency across the value chain.

Implementation notes: Version control for protocols; human QA; strong traceability.
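One deviation class that is easy to flag deterministically is a visit outside its protocol window. The sketch assumes the protocol summary has been reduced to a target day and an allowed plus/minus window per visit (`VisitWindow` is a hypothetical structure) and compares those against actual visit dates. A GenAI layer might extract the dates from notes, but the comparison itself is plain code, and every flag carries the protocol version it was checked against.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class VisitWindow:
    visit: str
    target_day: int  # days after baseline (Day 0)
    window: int      # allowed +/- days

def flag_deviations(baseline: date, windows: list, actual: dict, protocol_version: str) -> list:
    """Return human-readable flags for missed or out-of-window visits."""
    flags = []
    for w in windows:
        if w.visit not in actual:
            flags.append(f"[{protocol_version}] {w.visit}: no visit recorded")
            continue
        day = (actual[w.visit] - baseline).days
        if abs(day - w.target_day) > w.window:
            flags.append(f"[{protocol_version}] {w.visit}: day {day}, expected {w.target_day}+/-{w.window}")
    return flags
```

Each flag is a candidate for human QA, not a confirmed deviation; a missing visit, for instance, may simply not have been documented yet.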

10) Pharmacovigilance: adverse event (AE) intake and narrative creation

What it does: Converts unstructured reports (call logs, emails, notes) into structured AE cases; drafts case narratives with suggested MedDRA-coded terms for reviewer confirmation.

Example: GenAI helps scale safety ops without losing consistency.

Implementation notes: Strict review workflows; consistent terminology mapping; robust de-identification where appropriate.
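Before a drafted case enters review, a deterministic gate can confirm it meets the four minimum criteria that ICH E2D sets for a valid individual case safety report: an identifiable patient, an identifiable reporter, a suspect product, and an adverse event. The field names and the “any one field suffices” grouping below are simplifying assumptions for illustration, and `missing_minimum_criteria` is a hypothetical helper.

```python
# Simplified field groups; any one populated field satisfies the criterion.
MINIMUM_CRITERIA = {
    "patient": ("patient_initials", "patient_id", "age", "sex", "date_of_birth"),
    "reporter": ("reporter_name", "reporter_initials", "reporter_contact", "reporter_qualification"),
    "suspect_product": ("product_name",),
    "adverse_event": ("event_description",),
}

def missing_minimum_criteria(case: dict) -> list:
    """Return the minimum criteria that a drafted case does not yet satisfy."""
    return [
        criterion
        for criterion, fields in MINIMUM_CRITERIA.items()
        if all(case.get(f) in (None, "") for f in fields)
    ]
```

A non-empty result would route the case back for follow-up with the reporter rather than letting the model fill the gap.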

11) Medical imaging workflow support (radiology reporting drafts)

What it does: Generates report drafts from dictation and structured findings; assists with edits and consistency.

Example: RADPAIR’s radiology reporting product has publicly referenced being “powered by Groq” for fast inference.

Implementation notes: Radiologist final sign-off; quality gates to catch hallucinated findings; PACS/RIS integration.

12) Pathology and genomics report summarization (clinician + patient versions)

What it does: Creates dual reports: clinical summary (technical) and patient summary (plain language).

Example: Especially useful for complex molecular panels and long pathology narratives.

Implementation notes: Use controlled vocab + references; never invent variants; highlight uncertainty and next steps.
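The “never invent variants” rule can be enforced mechanically by checking that every variant named in a generated summary also appears in the source report. This sketch assumes protein-level descriptions such as `p.V600E` and uses a deliberately simple pattern (`PROTEIN_CHANGE`) that is not a complete HGVS parser; for example, it does not treat one-letter and three-letter amino-acid forms as equivalent.

```python
import re

# Simplified pattern for protein-level changes such as "p.V600E" or "p.Gly12Asp".
# An assumption for illustration, not a full HGVS grammar.
PROTEIN_CHANGE = re.compile(r"p\.[A-Za-z]{1,3}\d+[A-Za-z*]{1,3}")


def variants(text: str) -> set:
    """Extract protein-change mentions, normalized to lowercase."""
    return {m.lower() for m in PROTEIN_CHANGE.findall(text)}


def ungrounded_variants(source_report: str, summary: str) -> set:
    """Variants mentioned in the generated summary that never appear in the source report."""
    return variants(summary) - variants(source_report)
```

A non-empty result should block release of the summary (clinician or patient version) and send it back for review.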

13) Hospital ops: bed management, staffing notes, incident summaries

What it does: Summarizes shift handoffs, incident reports, and operational logs; drafts “morning briefing” memos.

Example: Potentially high ROI, because it reduces coordination overhead across shifts and departments.

Implementation notes: Access control by role; redact sensitive identifiers; maintain audit logs.
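The three implementation notes (role-based access, redaction, audit logs) can be combined into one pre-processing step that runs before any text reaches a model. The roles, document types, and identifier patterns below are hypothetical, and regex redaction on its own is not a complete de-identification method.

```python
import re
from datetime import datetime, timezone

# HYPOTHETICAL identifier patterns; real MRN formats vary by institution.
REDACTIONS = [
    (re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE), "[MRN]"),
    (re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"), "[PHONE]"),
]

# HYPOTHETICAL policy: which roles may summarize which operational document types.
ALLOWED = {
    "charge_nurse": {"shift_handoff", "bed_status"},
    "ops_director": {"shift_handoff", "bed_status", "incident_report"},
}

AUDIT_LOG = []  # in-memory stand-in for an append-only audit store


def redact(text: str) -> str:
    for pattern, token in REDACTIONS:
        text = pattern.sub(token, text)
    return text


def prepare_for_summary(user: str, role: str, doc_type: str, text: str) -> str:
    """Check role access, log the attempt, and return redacted text for the model."""
    allowed = doc_type in ALLOWED.get(role, set())
    AUDIT_LOG.append({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "doc_type": doc_type,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"role {role!r} may not summarize {doc_type!r}")
    return redact(text)
```

Denied requests are logged as well, since access attempts are often as important to audit as successful ones.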

14) Medical education and simulation (case generation + OSCE-style practice)

What it does: Creates realistic patient cases, differential prompts, and feedback rubrics—great for training.

Example: OpenAI’s HealthBench reflects the push toward structured evaluation of model behavior in health contexts.

Implementation notes: Avoid reinforcing unsafe practices; ensure curriculum alignment; physician review.

15) Personal health “workspace” and record-connected assistants

What it does: A secure place for users to organize records, prepare for visits, summarize history, and generate question lists.

Example: OpenAI has launched a healthcare-oriented offering and has reported on health-related usage patterns and access challenges (“hospital deserts”).

Implementation notes: Consent-driven access; minimize retention; clear boundaries (support, not diagnosis).

BUYER SIGNALS FOR EMERGING USE CASES: WHO OWNS, BUYS, AND FUNDS EACH USE CASE

| Use Case | Primary Buyer / Owner | Budget Origin | Trigger Signals | Buying Motion |
|---|---|---|---|---|
| Post-Discharge Follow-Ups & Care Plan Reinforcement | VP Care Management; Population Health Leader | Value-based care; readmission reduction; care coordination | High 30-day readmissions; medication non-adherence; missed follow-ups | Outcomes-driven pilots; expansion tied to readmission reduction and quality metrics |
| Clinical Trial Matching (Patients ↔ Trials) | Head of Clinical Research; VP Clinical Operations | R&D; clinical trials; life sciences partnerships | Slow recruitment; high screen-failure rates; low trial diversity | Research-led pilots; scale after measurable recruitment acceleration |
| Trial Site Operations: Protocol Summarization & Deviation Detection | Director of Clinical Trial Ops; QA / Compliance Lead | Trial operations; QA; regulatory compliance | Frequent protocol deviations; high monitoring costs; audit readiness pressure | Ops-led adoption; expansion after deviation reduction is proven |
| Pharmacovigilance: AE Intake & Narrative Creation | Head of Drug Safety; Pharmacovigilance Lead | Drug safety; post-market surveillance; compliance | AE processing backlogs; regulatory reporting deadlines; growing safety volumes | Compliance-driven rollout; cautious scale post-regulatory validation |
| Medical Imaging Workflow Support (Radiology Reporting Drafts) | Chair of Radiology; CIO (shared) | Imaging ops; radiology productivity; IT modernization | Radiologist burnout; report turnaround delays; imaging volume growth | Specialty-led pilots; expansion after productivity and TAT gains |
| Pathology & Genomics Report Summarization | Chair of Pathology; Molecular Diagnostics Lead | Precision medicine; lab modernization; oncology programs | Long, complex reports; clinician confusion; patient comprehension gaps | Specialty-first pilots; scale after clinician trust is established |
| Hospital Operations: Bed Management, Staffing & Incident Summaries | COO; VP Hospital Operations | Operations excellence; capacity management; cost containment | Bed shortages; staffing inefficiencies; poor shift handoffs | Ops-led deployment; rapid scale if throughput improves |
| Medical Education & Simulation (Case Generation, OSCE Practice) | Dean of Medical Education; GME Director | Medical education; training innovation; simulation labs | Faculty shortages; inconsistent training quality; OSCE prep burden | Academic pilots; scale with curriculum adoption |
| Personal Health “Workspace” & Record-Connected Assistants | Chief Digital Officer; VP Consumer Health | Digital front door; patient engagement; consumer platforms | Low portal engagement; fragmented patient records; access inequity | Digital-led rollout; gradual scale tied to engagement and trust metrics |

Why OpenAI, Claude, and Groq entering healthcare matters

OpenAI: productization + evaluation maturity

OpenAI is packaging dedicated healthcare offerings around its security and compliance posture, and is investing in health-specific benchmarks (such as HealthBench) and clinical copilot positioning. Together, these push the market toward measurable quality and safer deployment patterns.

Claude (Anthropic): life sciences + healthcare integrations

Anthropic is highlighting healthcare and life sciences partnerships (e.g., Sanofi) and record-connected experiences delivered through partners, signaling enterprise rollouts embedded in real workflows rather than hobbyist usage.

Groq: the “inference layer” for real-time clinical workflows

Groq’s healthcare positioning is less about models and more about fast, scalable inference with healthcare-grade controls (e.g., HIPAA-ready terms), with examples in radiology reporting systems. This matters because many clinical workflows require low latency and predictable uptime.

Conclusion: From Model Demos to Clinical-Grade Systems

The simultaneous entry of OpenAI, Claude, and Groq into healthcare marks a structural inflection point, not a feature upgrade. What’s emerging is a full-stack generative AI healthcare substrate—one that spans model intelligence, clinical reasoning, evaluation rigor, and real-time inference infrastructure.

OpenAI’s emphasis on healthcare productization, clinical benchmarks, and safety-aligned copilots signals a maturation of GenAI from probabilistic text generation into measurable, auditable clinical support systems. This matters because healthcare does not reward novelty; it rewards reproducibility, traceability, and risk containment. Health-specific evaluations, constrained prompting, and governance-first deployments are quietly redefining what “production-ready” actually means in regulated care environments.

Claude’s expansion into life sciences and record-connected workflows underscores a parallel shift: generative models are no longer peripheral assistants, but embedded reasoning layers inside enterprise healthcare stacks. When large language models begin operating directly against longitudinal patient records, trial protocols, and pharmacological datasets, the value moves upstream, from UI novelty to condensing decision-relevant context across R&D, clinical operations, and patient communication.

Groq completes this triangle by addressing the often-overlooked bottleneck: inference latency and determinism. In healthcare, milliseconds matter—not only for radiology but also for conversational triage, ambient documentation, and real-time clinical decision support. Groq’s positioning as a healthcare-grade inference fabric reframes GenAI not as a cloud novelty, but as always-on computational infrastructure capable of supporting continuous, safety-critical workflows.

Taken together, these three vectors—model intelligence, workflow-native integration, and ultra-low-latency inference—are converging into a new operating paradigm for healthcare systems. Generative AI is no longer an experimental overlay on EHRs and data warehouses; it is becoming a cognitive orchestration layer that mediates how clinicians document, how patients understand care, how trials recruit, and how health systems scale expertise.

The next phase of generative AI in healthcare will not be defined by bigger models or flashier demos, but by clinical reliability, epistemic restraint, and infrastructure resilience. Organizations that recognize this shift early—and architect for safety, governance, and performance—will move beyond pilots into durable advantage. The rest will remain stuck optimizing prompts while the industry quietly rebuilds itself underneath them.

Frequently Asked Questions (FAQs)

1. How is generative AI in healthcare different from traditional clinical AI models?

Traditional clinical AI is largely discriminative—it classifies, predicts, or flags anomalies based on predefined labels. Generative AI, by contrast, operates as a context-aware synthesis engine, capable of producing structured clinical notes, patient-facing explanations, protocol summaries, and operational narratives. The shift is from point predictions to longitudinal reasoning across unstructured clinical corpora, enabling workflows that were previously manual, fragmented, or economically infeasible.

2. Can generative AI be safely deployed in regulated healthcare environments?

Yes—but only when deployed with healthcare-grade guardrails. Production GenAI systems require retrieval-augmented generation (RAG) over curated medical sources, human-in-the-loop validation for clinical outputs, strict PHI isolation, and continuous evaluation against domain-specific benchmarks. Platforms like OpenAI, Claude, and Groq are pushing the ecosystem toward auditable, constraint-driven deployments rather than unconstrained general-purpose models.
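To make two of these guardrails concrete, here is a minimal sketch of retrieval restricted to a curated corpus plus a human sign-off gate. It ranks passages by naive token overlap (production systems typically use BM25 or embedding search), the passage IDs and text are hypothetical, and no model call is shown because the constrained prompt could be sent to any provider.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Passage:
    source_id: str   # HYPOTHETICAL ID of a curated, approved source document
    text: str


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: List[Passage], k: int = 2) -> List[Passage]:
    """Rank curated passages by naive token overlap; drop passages with no overlap."""
    q = tokens(question)
    ranked = sorted(corpus, key=lambda p: len(q & tokens(p.text)), reverse=True)
    return [p for p in ranked[:k] if q & tokens(p.text)]


def build_prompt(question: str, passages: List[Passage]) -> str:
    """Constrain the model to the retrieved sources and require citations."""
    context = "\n".join(f"[{p.source_id}] {p.text}" for p in passages)
    return (
        "Answer using ONLY the sources below and cite their IDs in brackets. "
        "If the sources do not answer the question, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


def release(draft: str, clinician_approved: bool) -> Optional[str]:
    """Human-in-the-loop gate: a clinical draft is only released after sign-off."""
    return draft if clinician_approved else None


corpus = [
    Passage("policy-A", "Lab results are released to the portal after clinician review."),
    Passage("policy-B", "Visitors must check in at the front desk."),
    Passage("policy-C", "Critical lab values are phoned to the ordering clinician."),
]
question = "When are lab results released to the portal?"
hits = retrieve(question, corpus)
prompt = build_prompt(question, hits)
```

Restricting retrieval to an approved corpus and requiring bracketed source IDs makes each answer auditable, while the `release` gate keeps a clinician accountable for anything patient-facing.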

3. Where does generative AI deliver the fastest ROI in healthcare today?

The fastest ROI emerges in administrative and workflow-heavy domains: ambient clinical documentation, revenue cycle management, prior authorization drafting, patient access automation, and trial operations. These use cases are high-volume, text-intensive, and error-prone—ideal conditions for generative systems that excel at semantic compression and structured output generation without direct clinical decision-making.

4. Why is inference latency such a critical factor for GenAI healthcare applications?

Healthcare workflows increasingly demand real-time or near-real-time responses—from radiology reporting and ambient scribing to conversational triage and clinician copilots. High latency introduces cognitive friction and workflow abandonment. This is why inference-focused platforms like Groq are strategically important: they enable deterministic, low-latency generation, which is essential for safety, adoption, and clinician trust in live clinical settings.
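Latency targets are usually stated as tail percentiles rather than averages, because clinicians notice the slow requests. A minimal nearest-rank percentile check, assuming a hypothetical p95 budget and illustrative samples, could look like this:

```python
import math


def percentile(samples_ms: list, pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_budget(samples_ms: list, p95_budget_ms: float) -> bool:
    """True when the 95th-percentile latency is within the (hypothetical) budget."""
    return percentile(samples_ms, 95) <= p95_budget_ms


# Illustrative samples: 90 fast responses and 10 slow ones.
samples = [200] * 90 + [2000] * 10
p50, p95 = percentile(samples, 50), percentile(samples, 95)
ok = meets_budget(samples, p95_budget_ms=800)
```

In this example the median is 200 ms and the mean is 380 ms, yet the p95 of 2,000 ms fails an 800 ms budget: exactly the kind of tail behavior that drives workflow abandonment.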

5. What will define the next generation of generative AI healthcare platforms?

The next generation will be defined less by model size and more by clinical reliability, governance maturity, and workflow embeddedness. Winning platforms will combine domain-specific evaluation, explainable outputs, privacy-preserving architectures, and infrastructure capable of sustained, real-time inference. In short, generative AI in healthcare is evolving from a tool into a cognitive operating layer—one that augments human expertise without eroding clinical accountability.

To participate in our interviews, please write to our HealthTech Media Room at info@intentamplify.com
